+HUMAN DESIGN LAB

Experiments with Generative AI

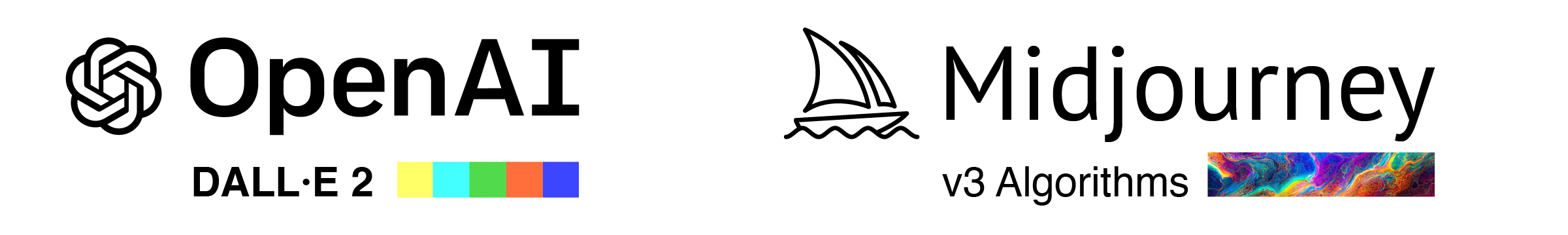

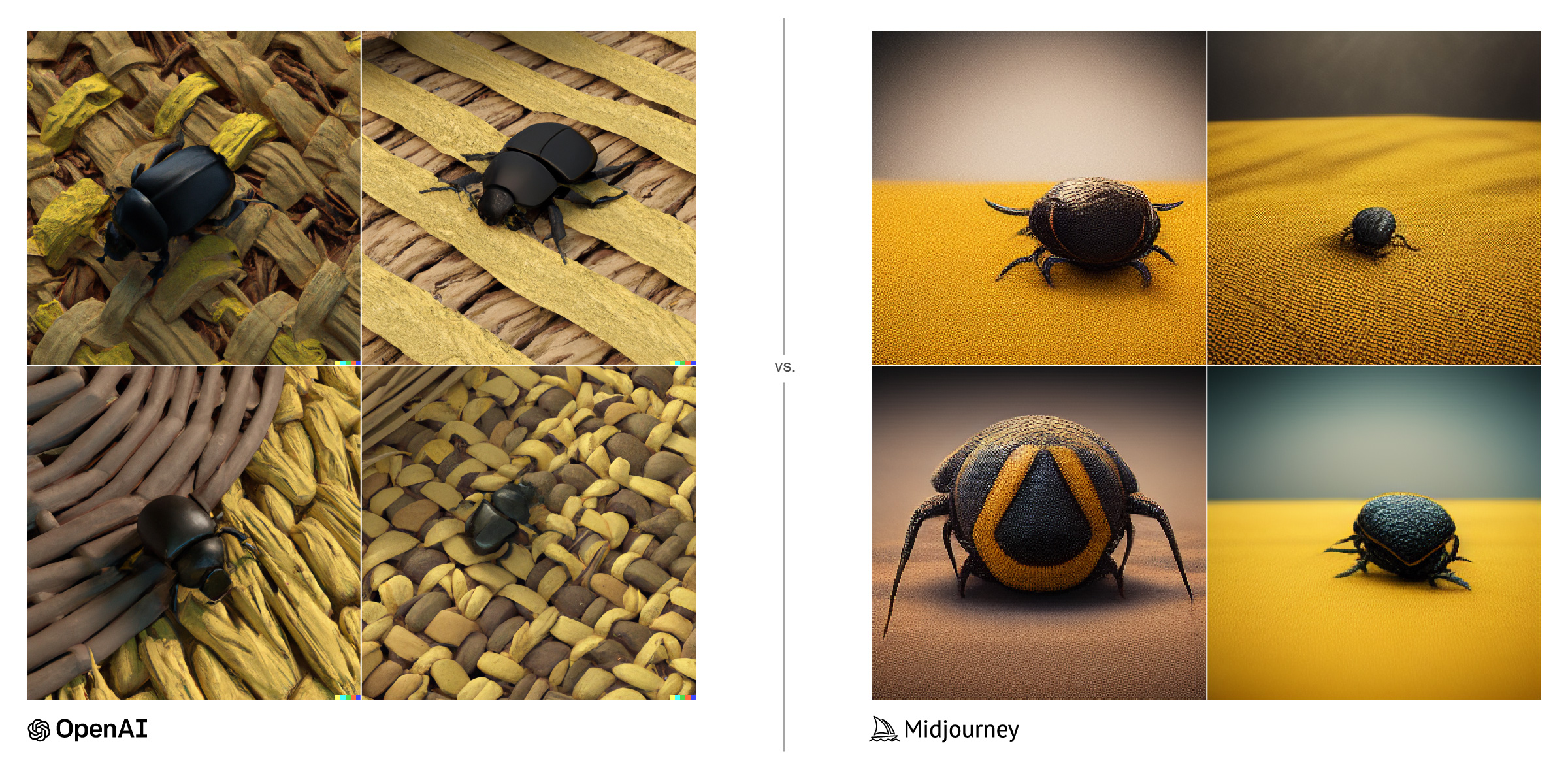

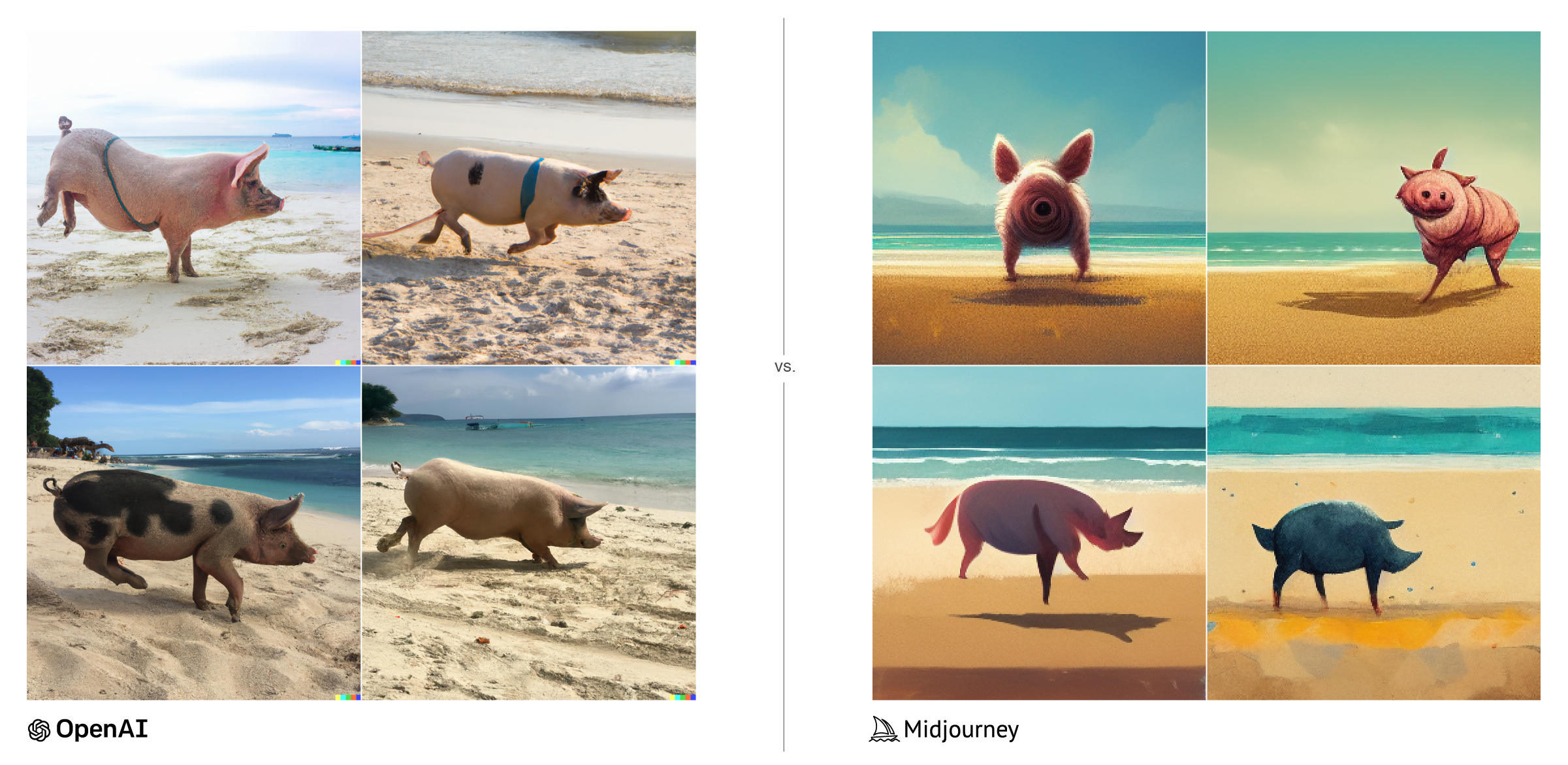

Exploring the capabilities, limitations, differences, biases and potential real-world uses of the DALL·E 2 and Midjourney artificial intelligence systems.

The future looks bright, perhaps thanks to the three Suns?

These past few days, we’ve been tinkering with two AI engines.

DALL·E 2 is an artificial intelligence system that can create realistic images and art from a description in natural language. It is part of a larger OpenAI set of models.

Midjourney is a similar AI program that creates images from textual descriptions. The team behind it is an independent, self-funded lab focusing on design, human infrastructure, and artificial intelligence.

The experiments

Our experiments explored the capabilities, limitations, differences, biases and potential real-world uses of both of these incredible tools.

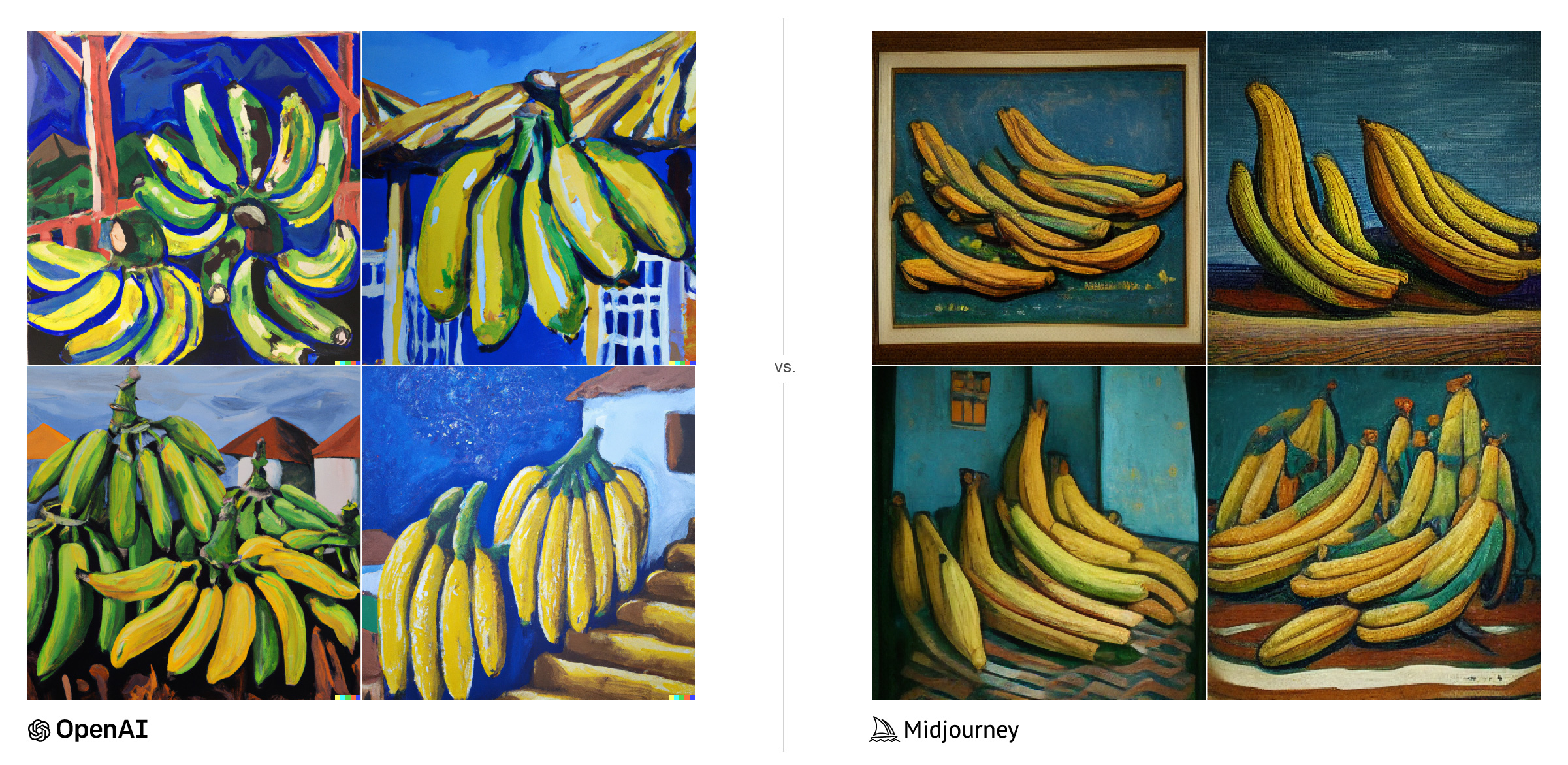

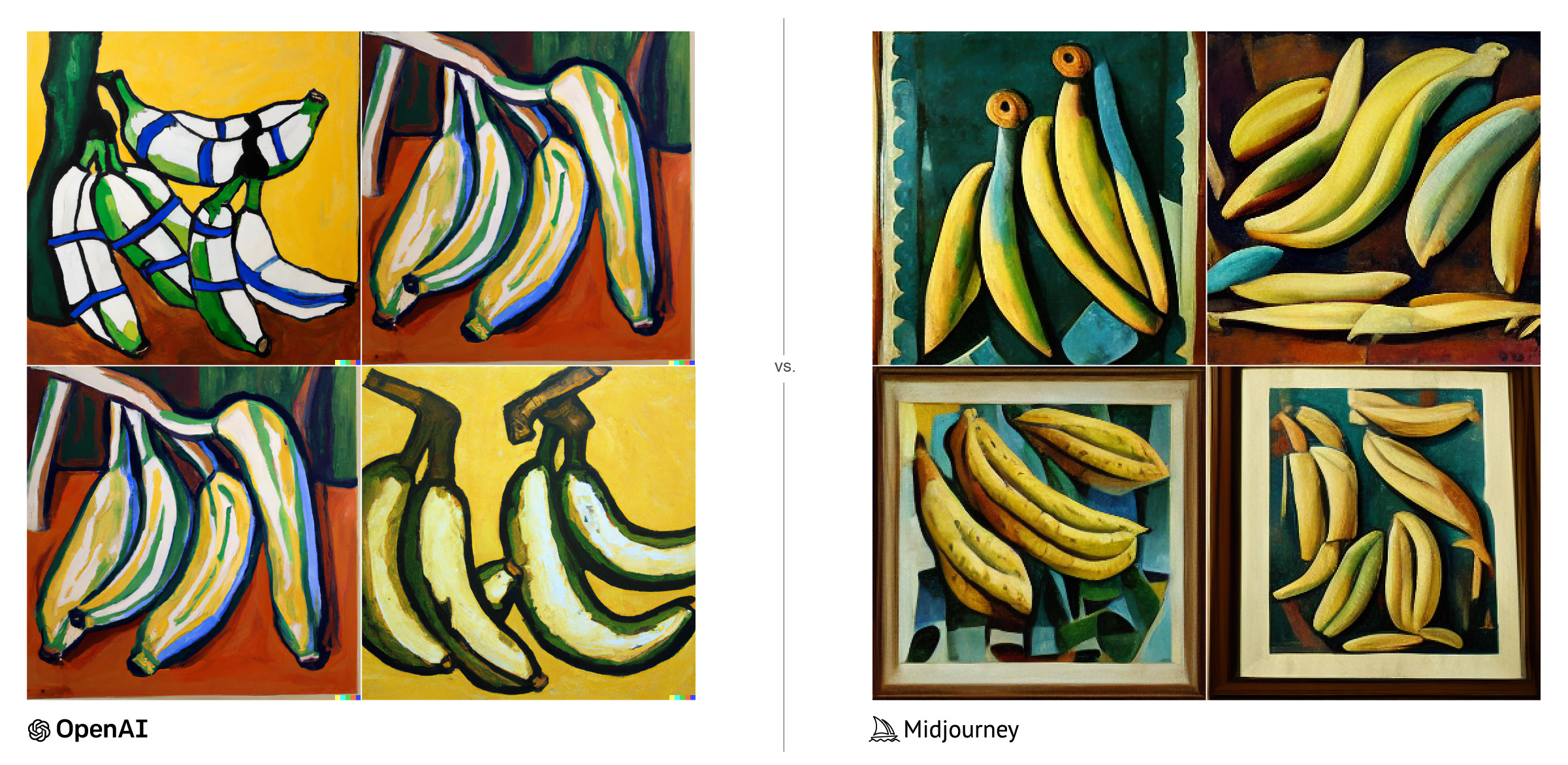

We started with common, well-understood general tasks to calibrate our own “machine speak”, then moved closer to home with challenges that needed more local context – places, things & topics more relevant to our part of the world.

A little fun to start us off…

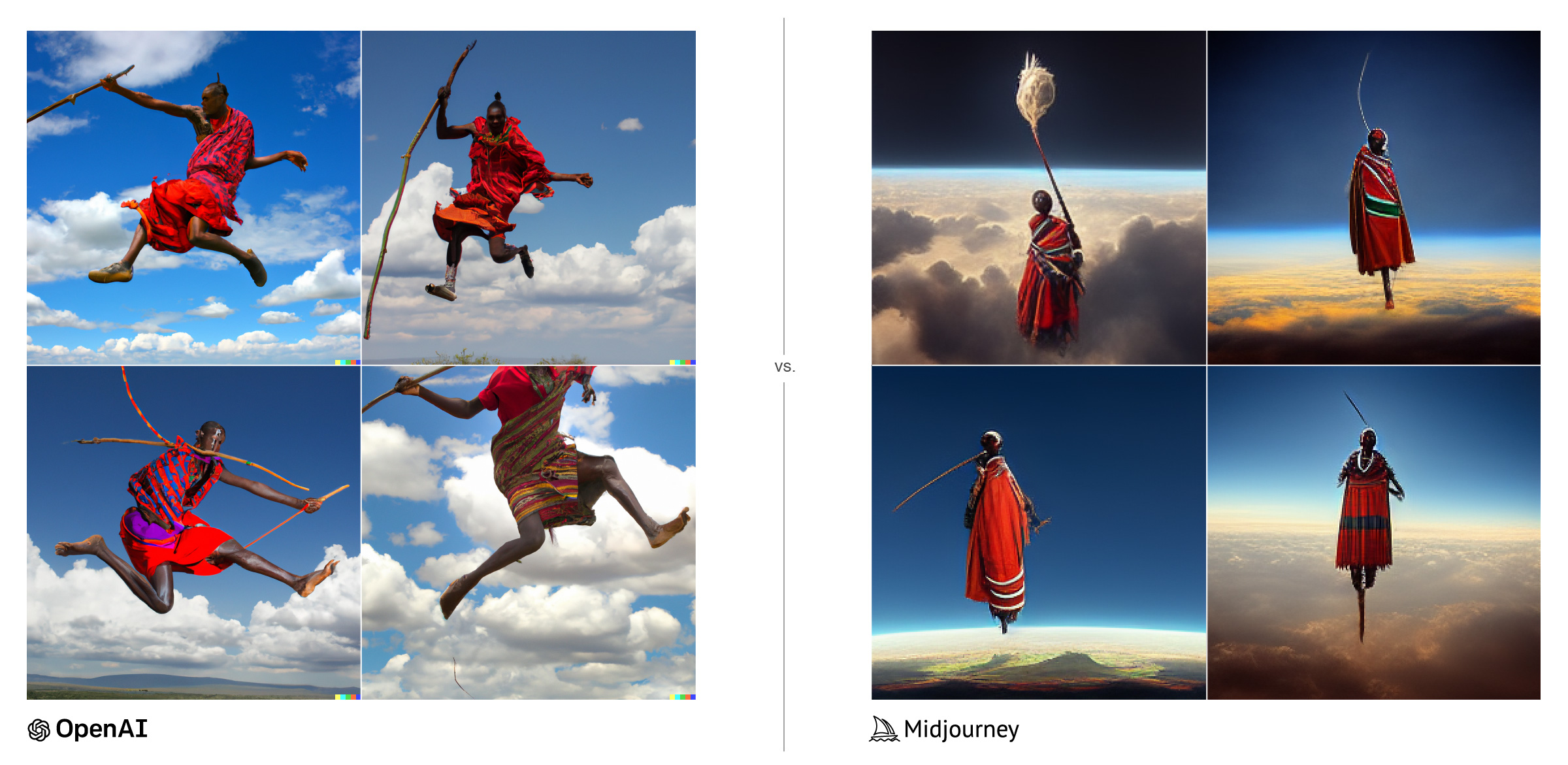

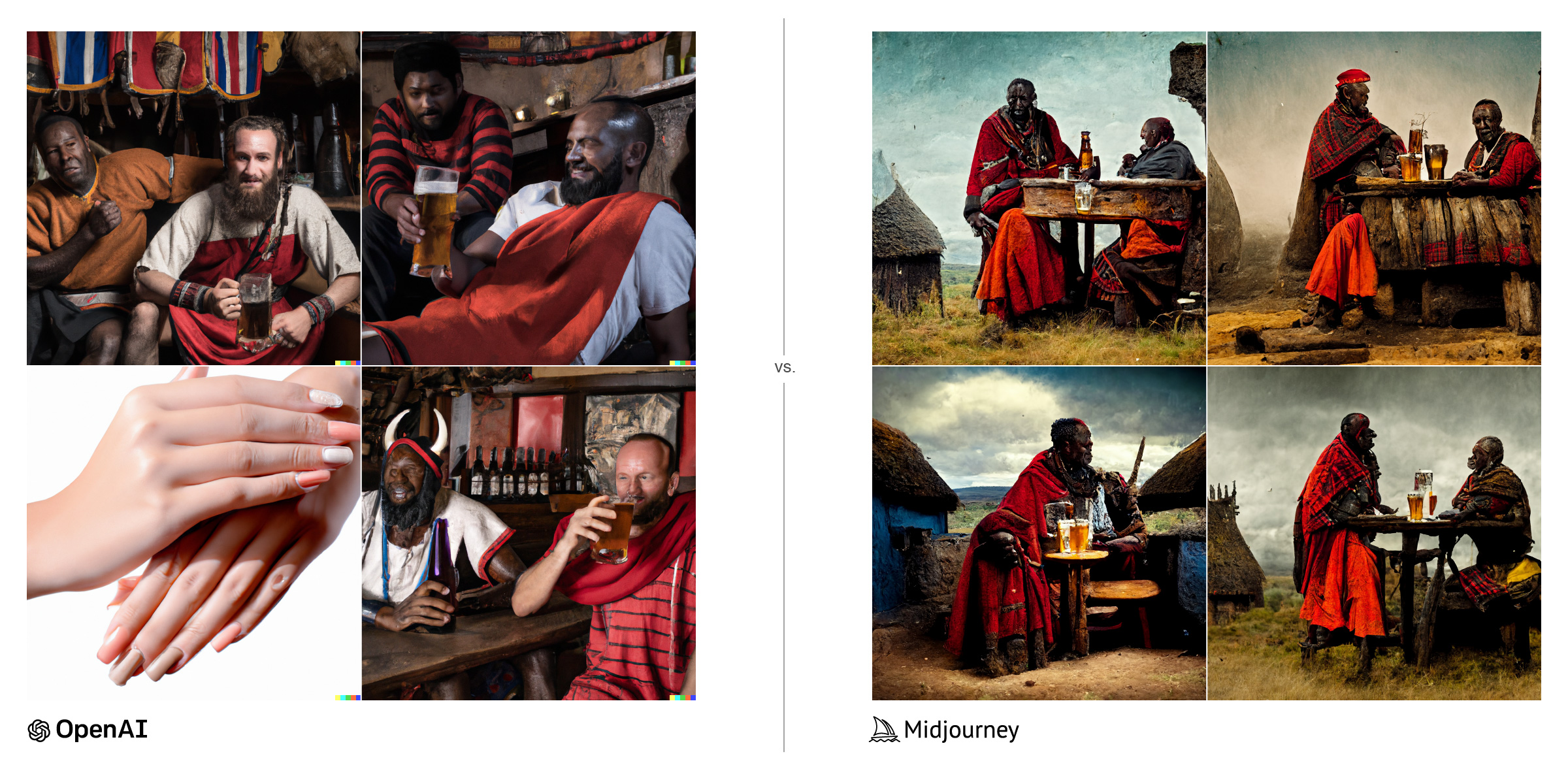

Let’s start presenting challenges closer to East Africa

People and faces

(they obviously became best buds and exchanged clothing at some point).

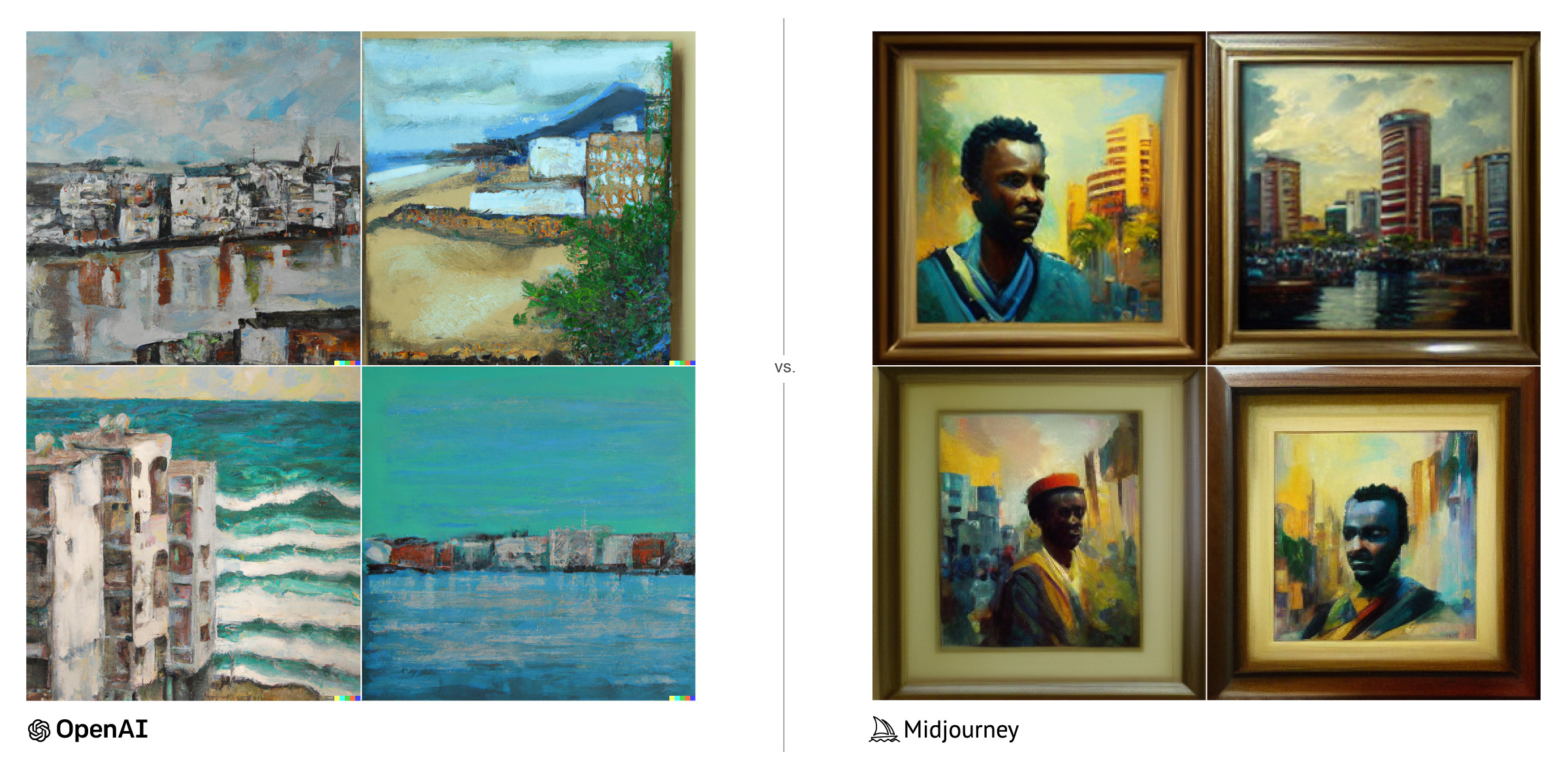

Dialling up an artistic render component

DALL·E 2 must have picked Luanda (capital of Angola) but Midjourney was kind enough to paint a face there as well. Dope.

And finally, some spaces and landscapes

Did you think Timbuktu was an imaginary place? Google it then come back, we’ll wait 🙂

Results

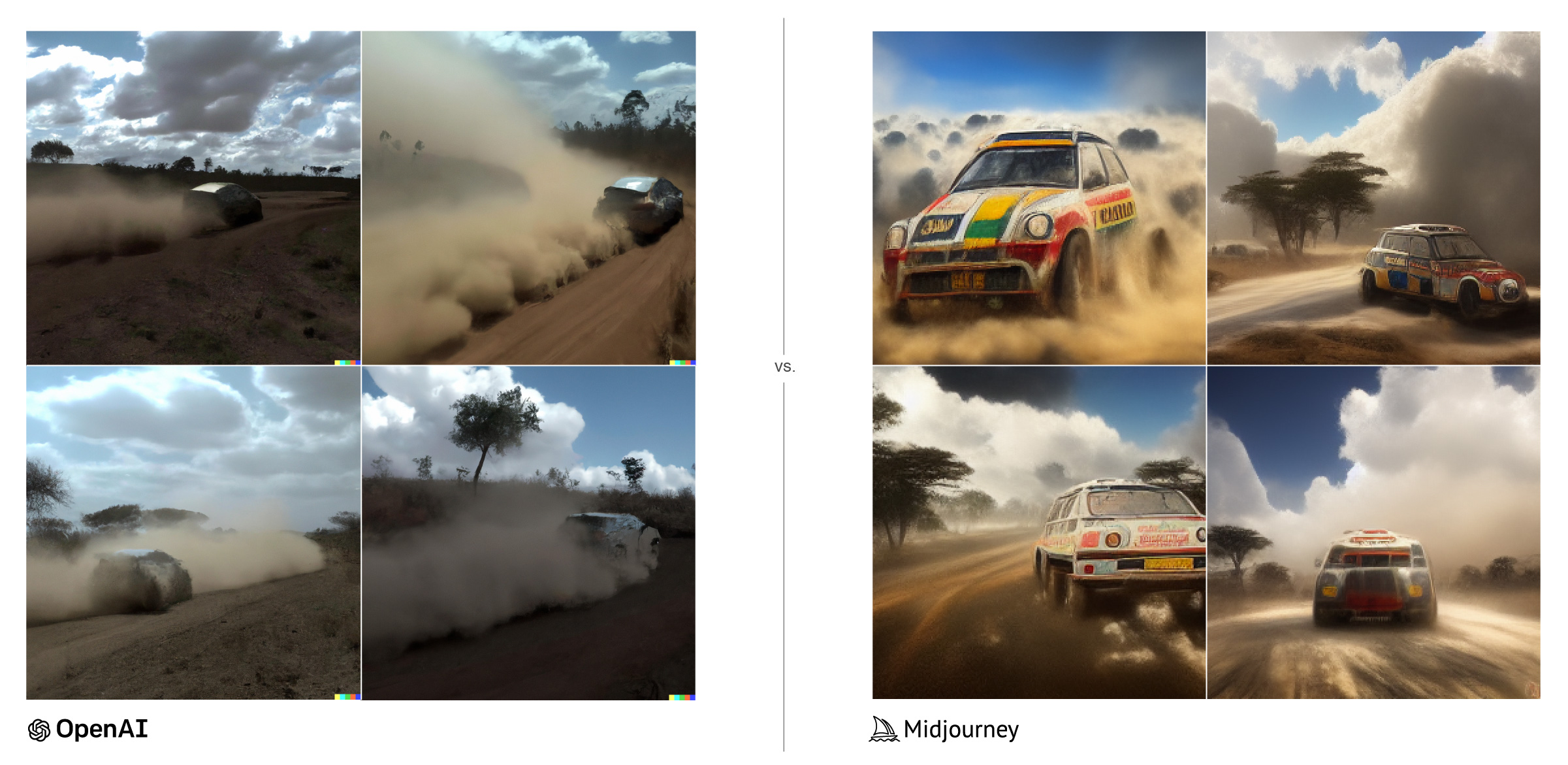

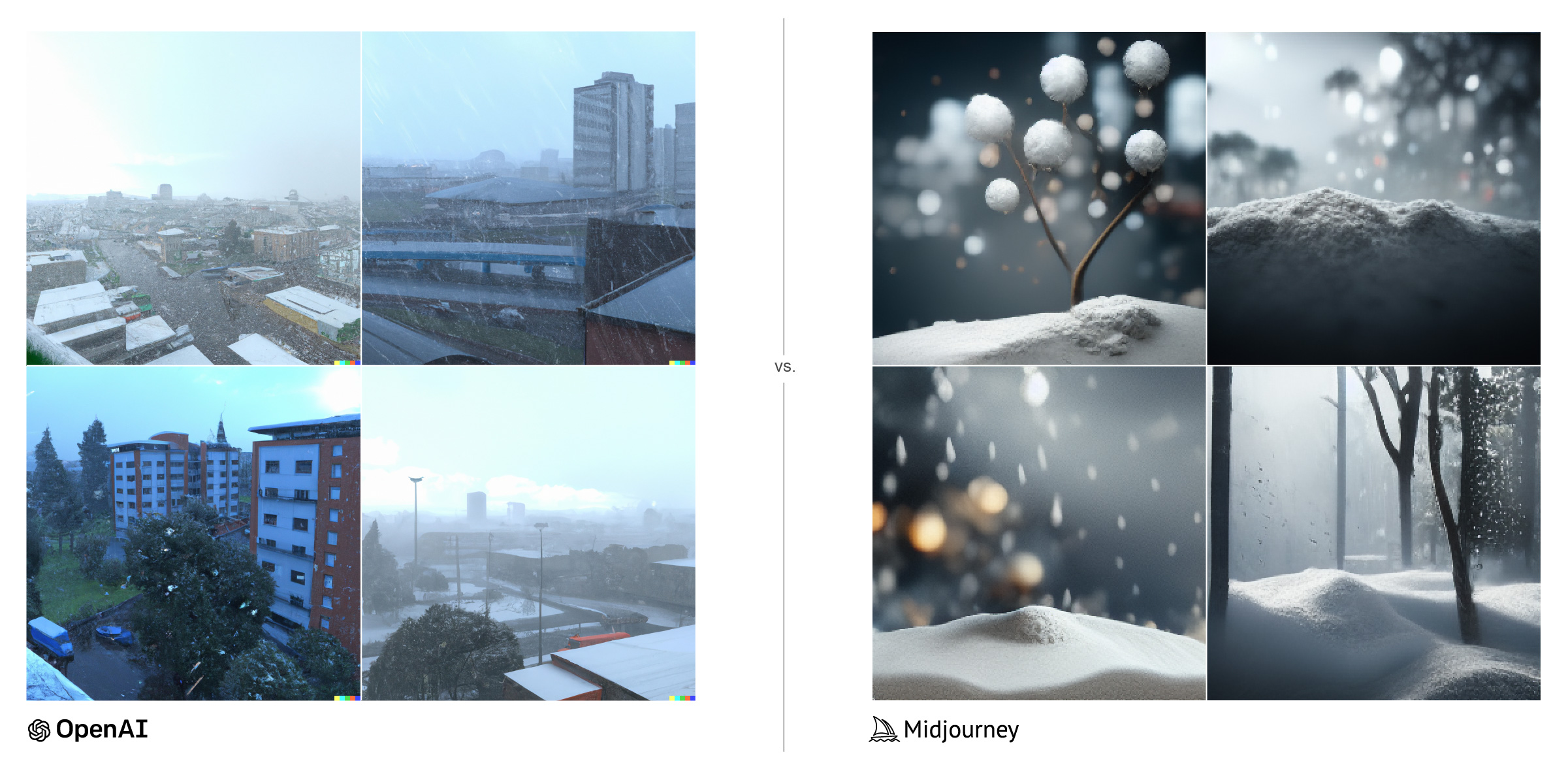

DALL·E 2

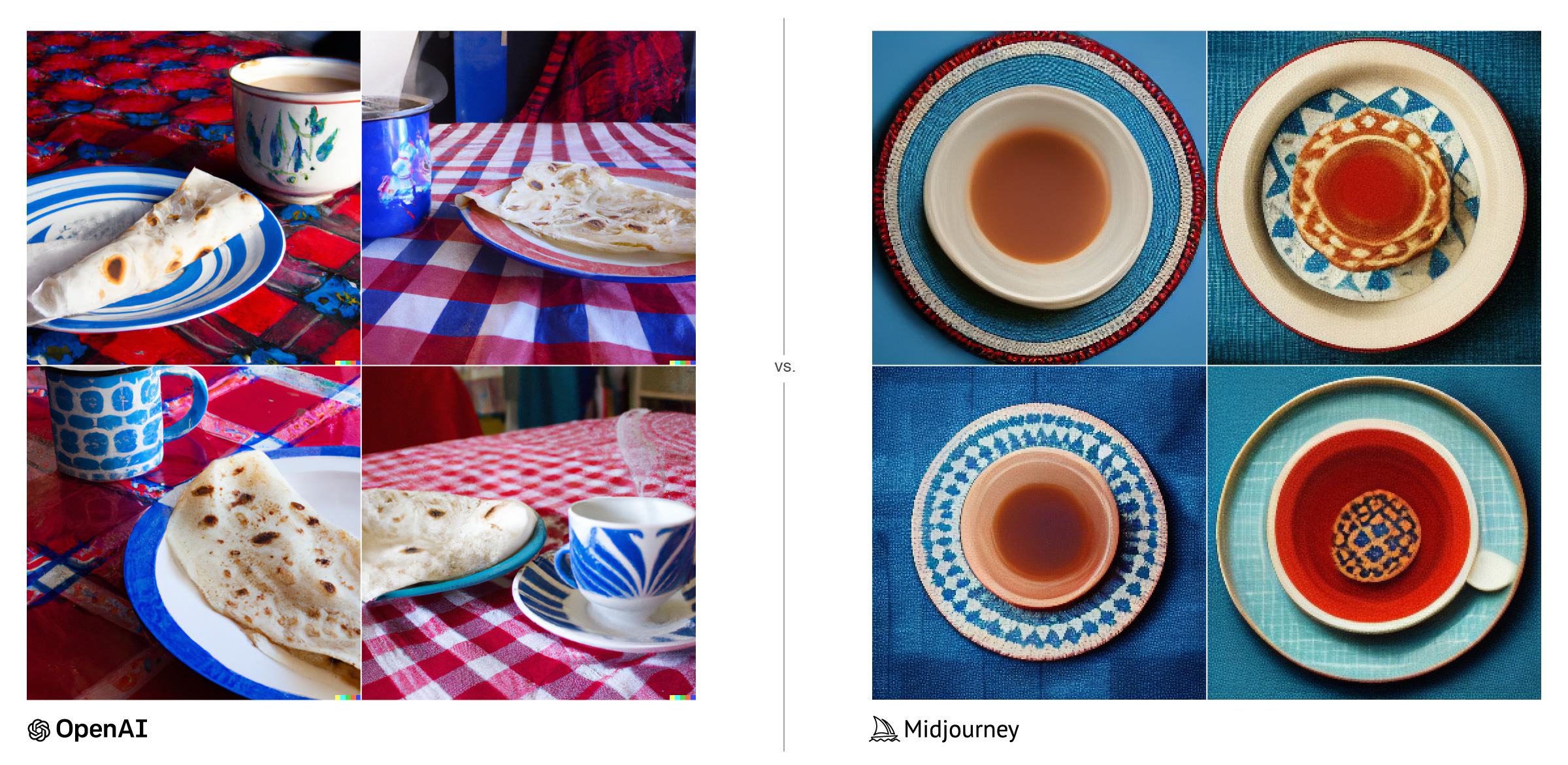

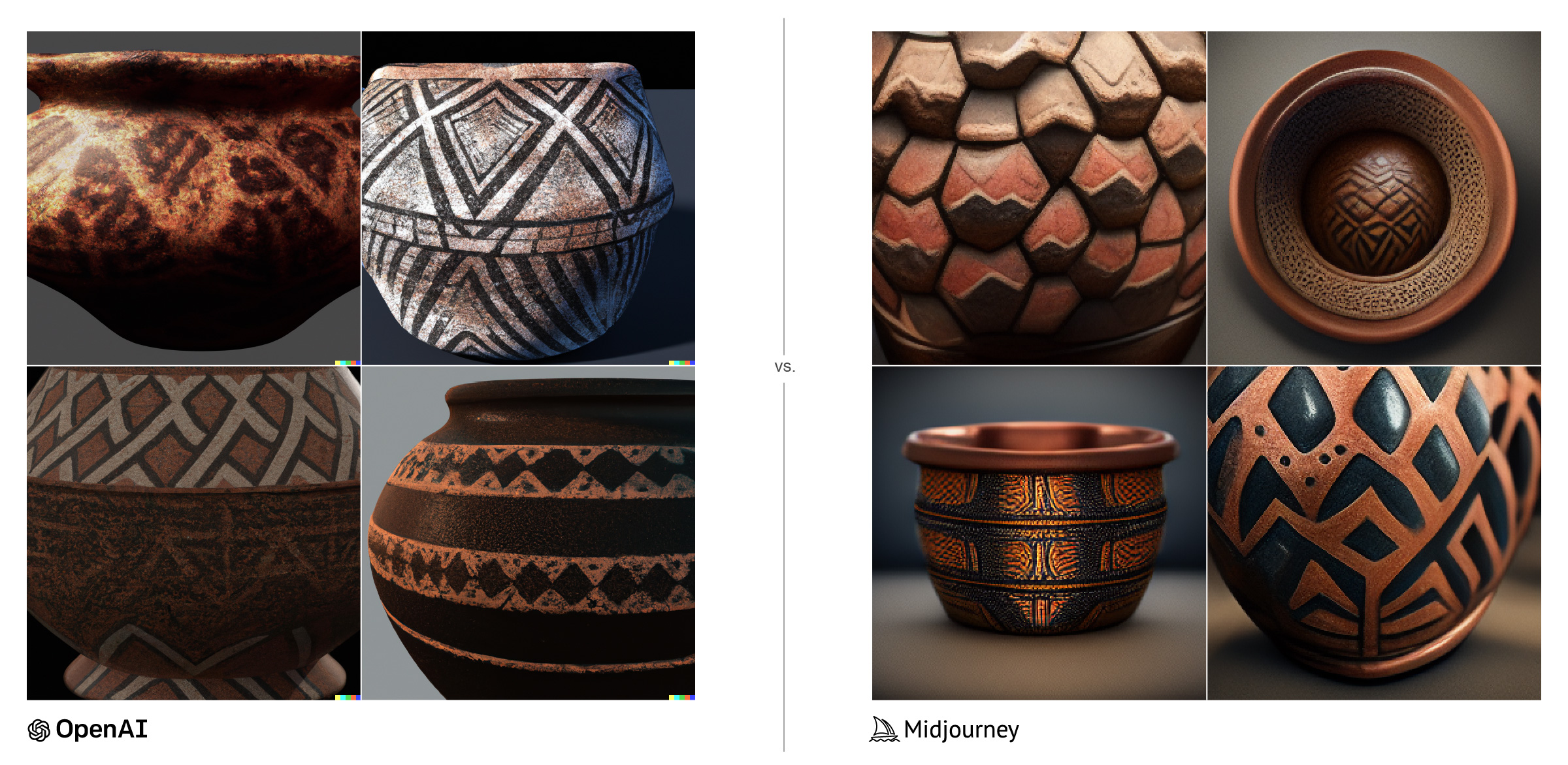

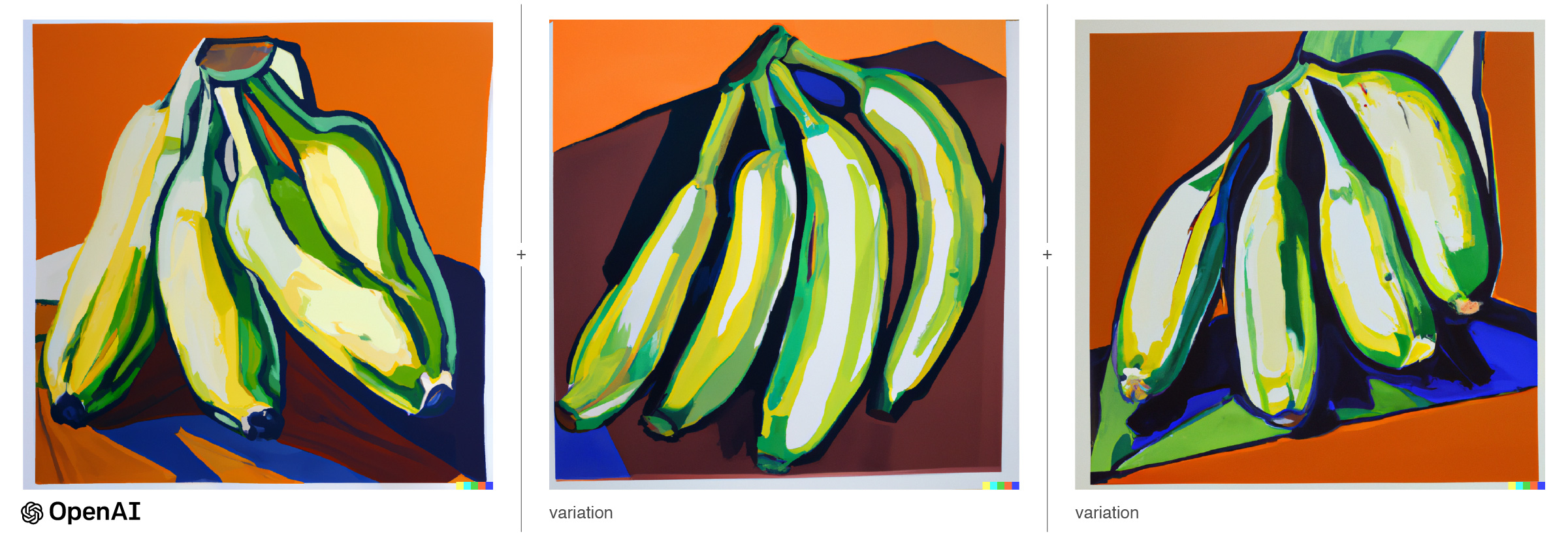

We soon realised that DALL·E 2 often seemed to draw on a wider range of data and “understood” natural language and the intent behind our queries better, producing objects, places and hyper-realistic faces and forms that were closer to what we expected.

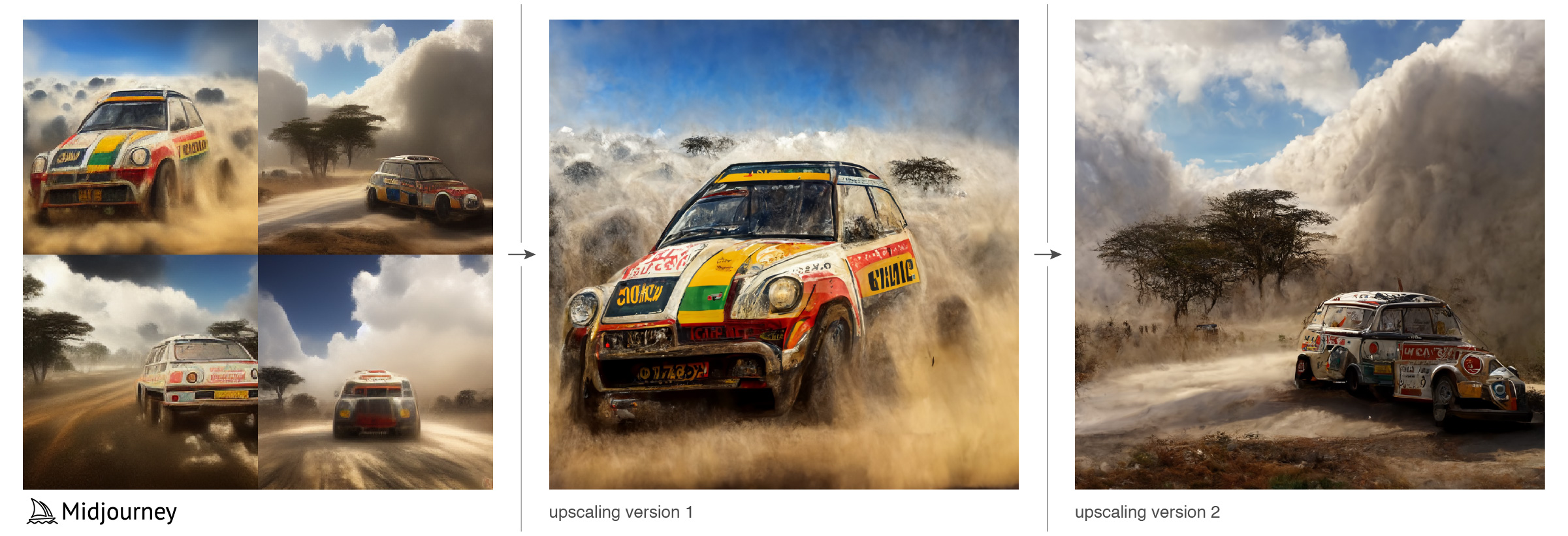

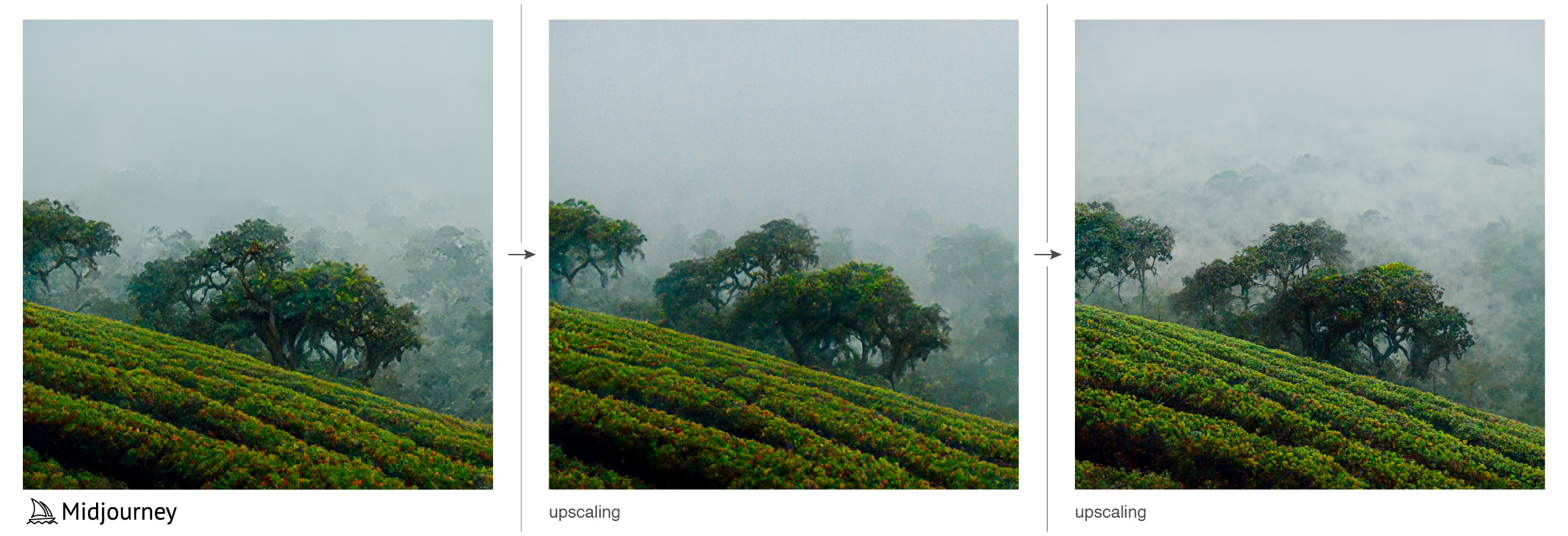

Midjourney

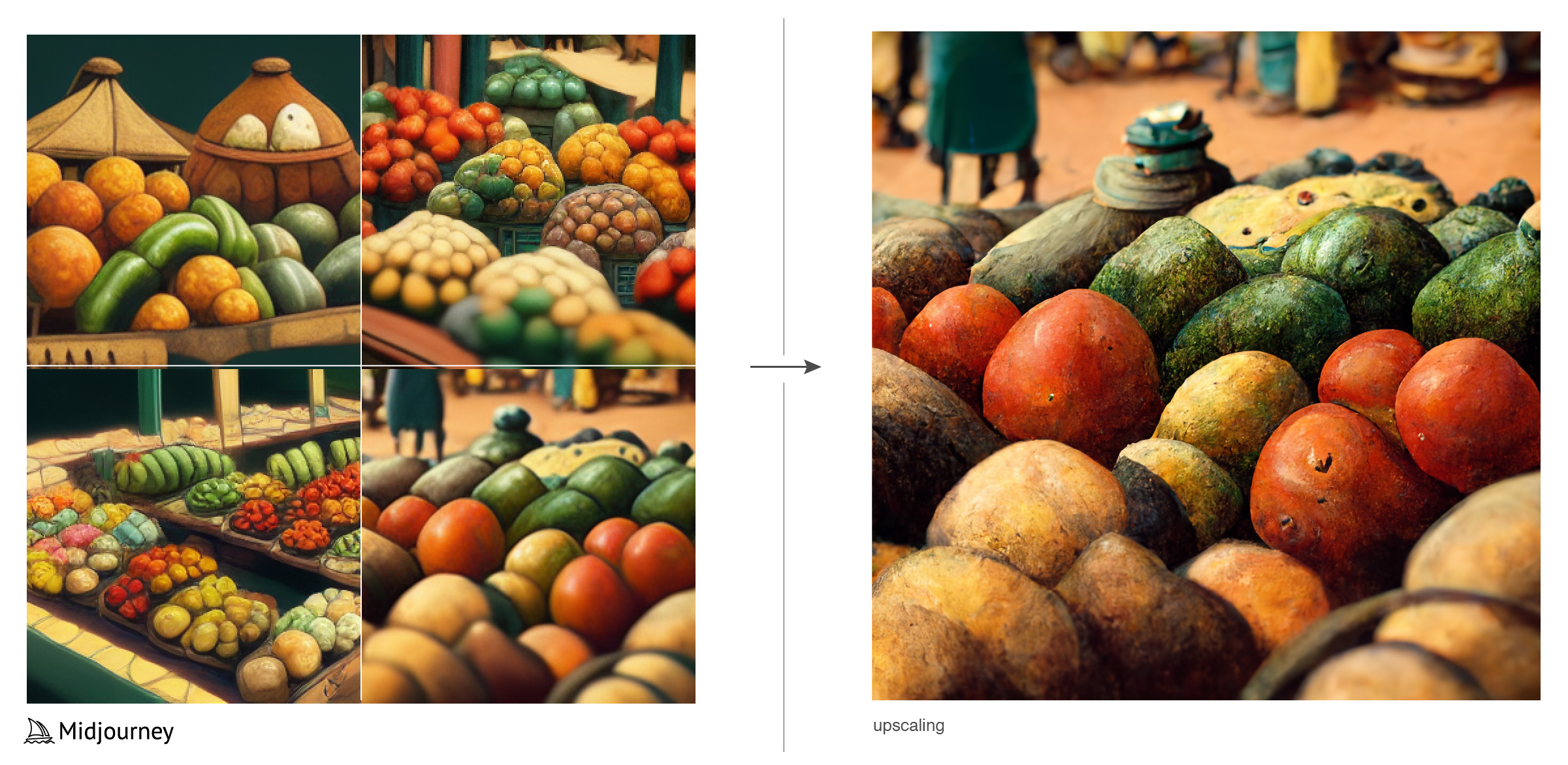

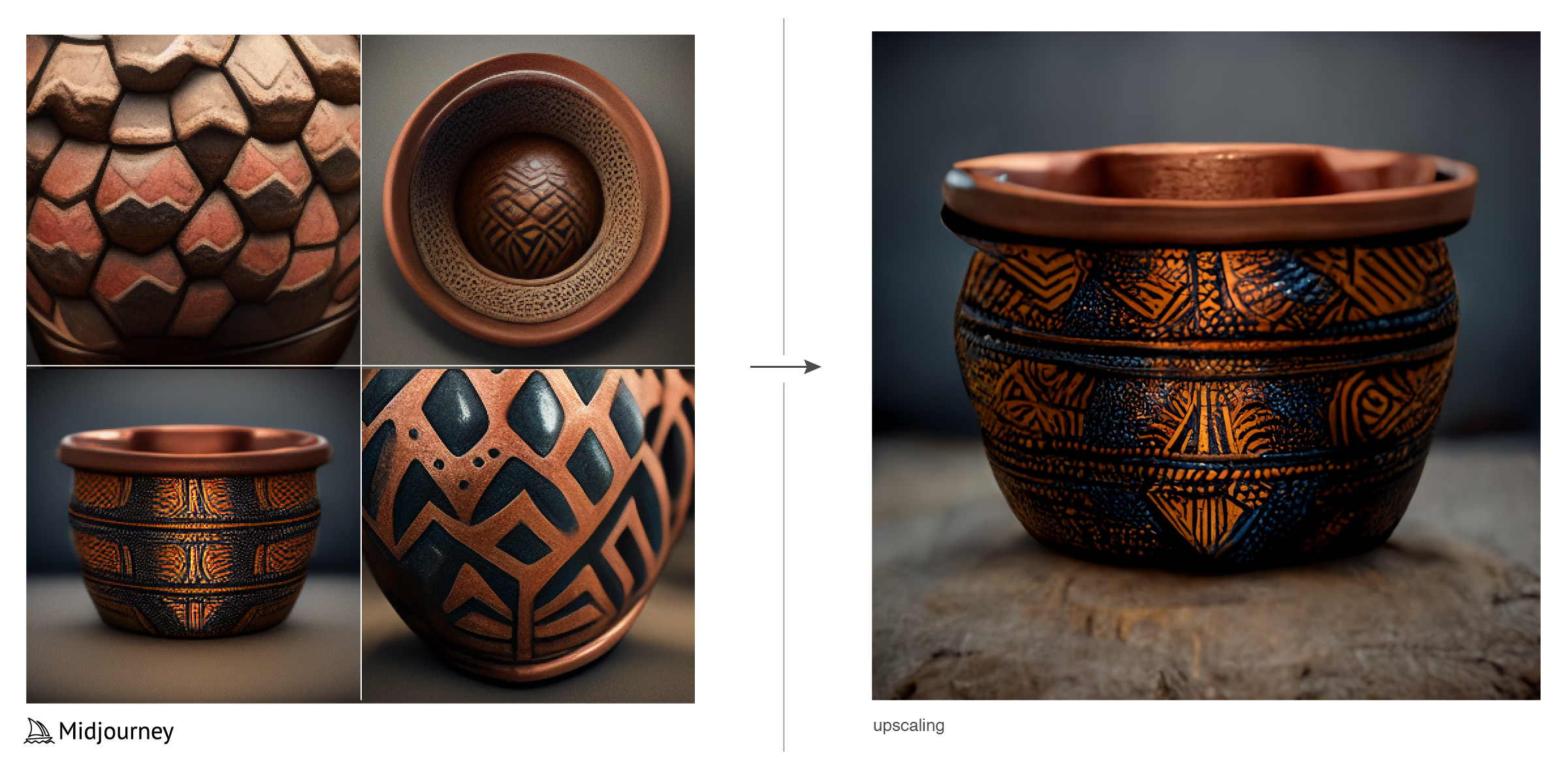

Midjourney, on the other hand, wouldn’t always understand certain terms and themes local to East Africa, but its results were more imaginative, and it was phenomenal at quickly producing extremely polished spatial forms, landscapes and artistic lighting environments.

What’s next?

In Part II of this series, we’ll expand on the generative processes behind these engines and discuss potential use cases in the design industry and the creative economy.