+HUMAN DESIGN LAB

Generative AI – the good, the bad and the spooky.

We started tinkering with Generative AI tools like Midjourney and Dall·E in July 2022, and a few months later with ChatGPT. They've been incredibly interesting tools to work with and have assisted us in our day-to-day work. Today, we take a step back and look a little deeper at what's changed, what's working, what's not, and what the future may hold for such AIs as they take on newer and more powerful capabilities.

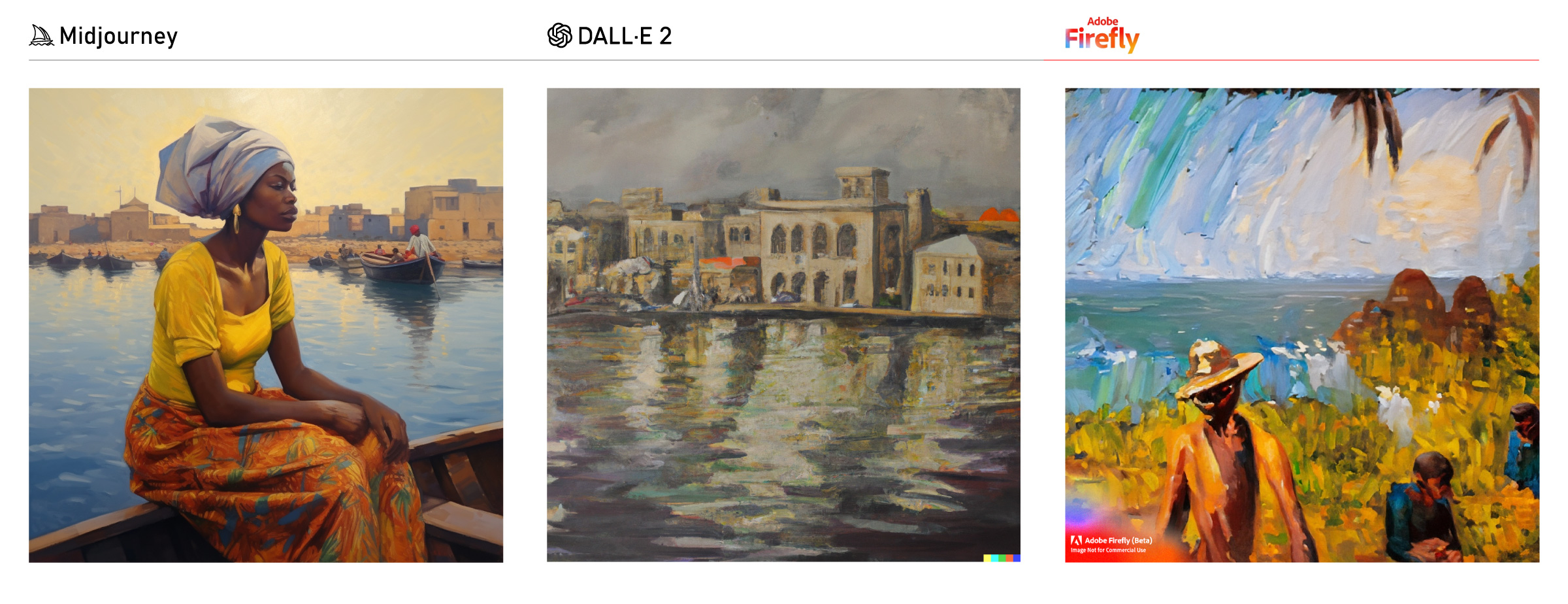

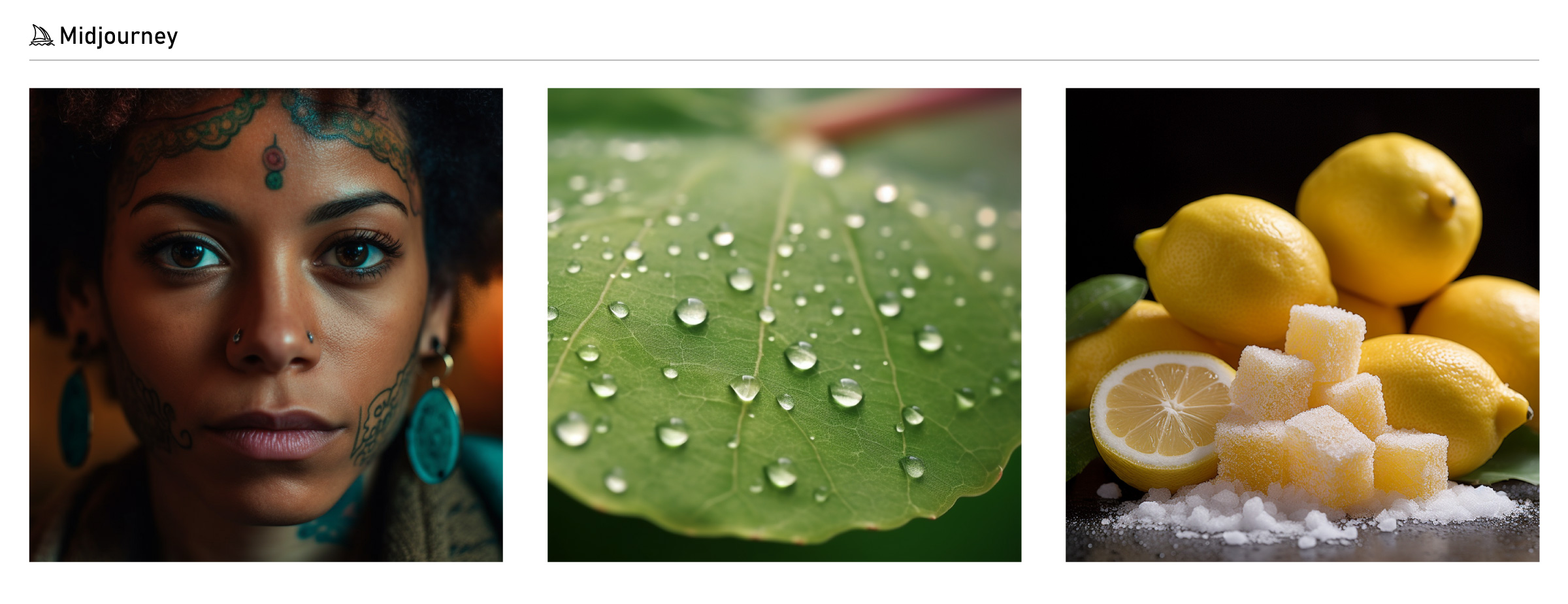

About a year ago, before “AI” became the buzzword it is today, we did a deep-dive experiment exploring what was possible with generative AI at the time. We tinkered with two AI engines, namely, Dall·E 2 and Midjourney v3, to test their capabilities, limitations, differences, bias and real-world potential uses. The results were, in a word, incredible.

The Evolution

What’s Changed?

Eleven months on, the technology powering generative AI has evolved rapidly. The capabilities of AI engines have surpassed what most of the world thought possible in such a short space of time. Here at ARK, having integrated a number of these AI tools into our everyday workflow, we have been privileged to witness this evolution first-hand.

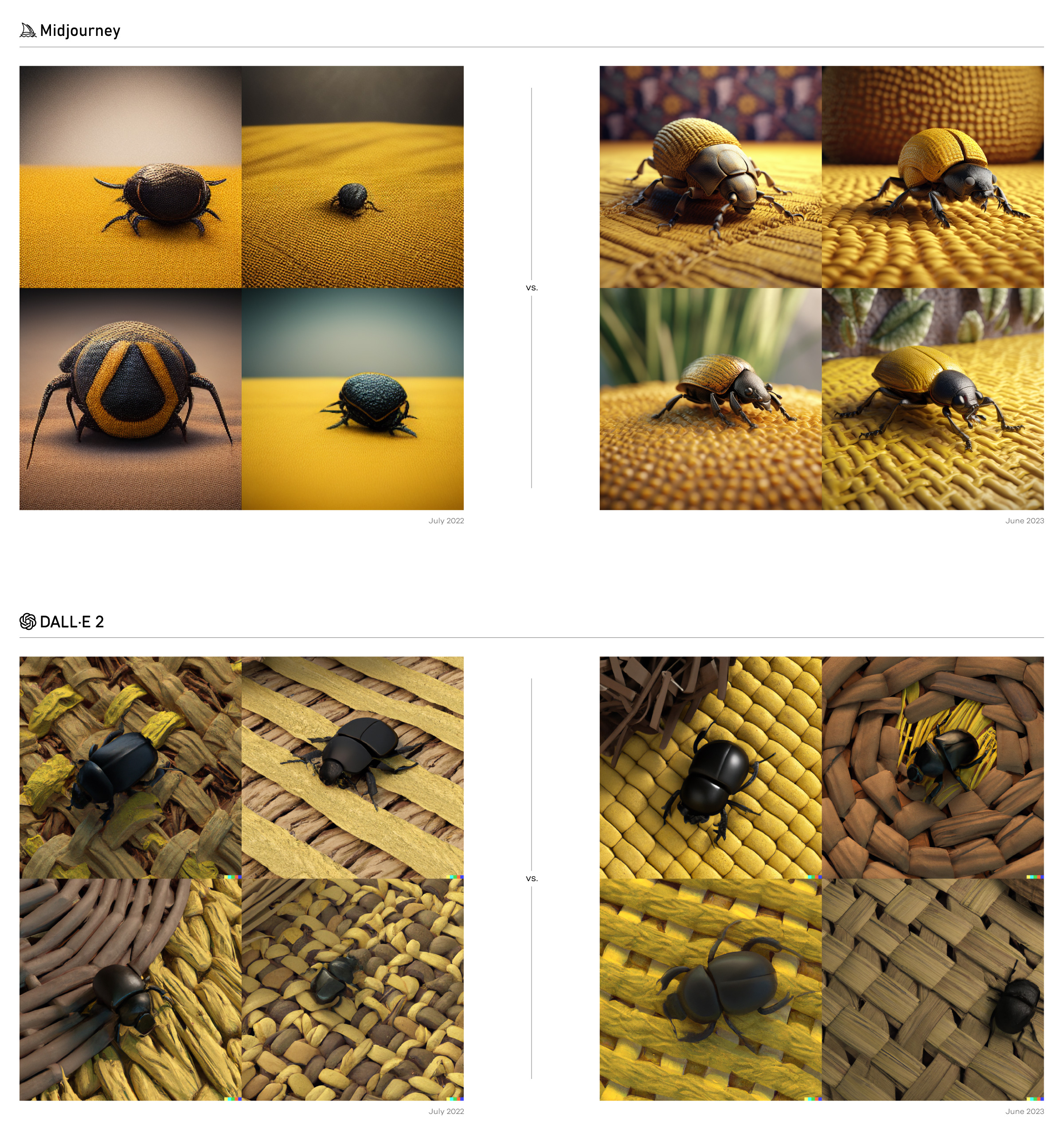

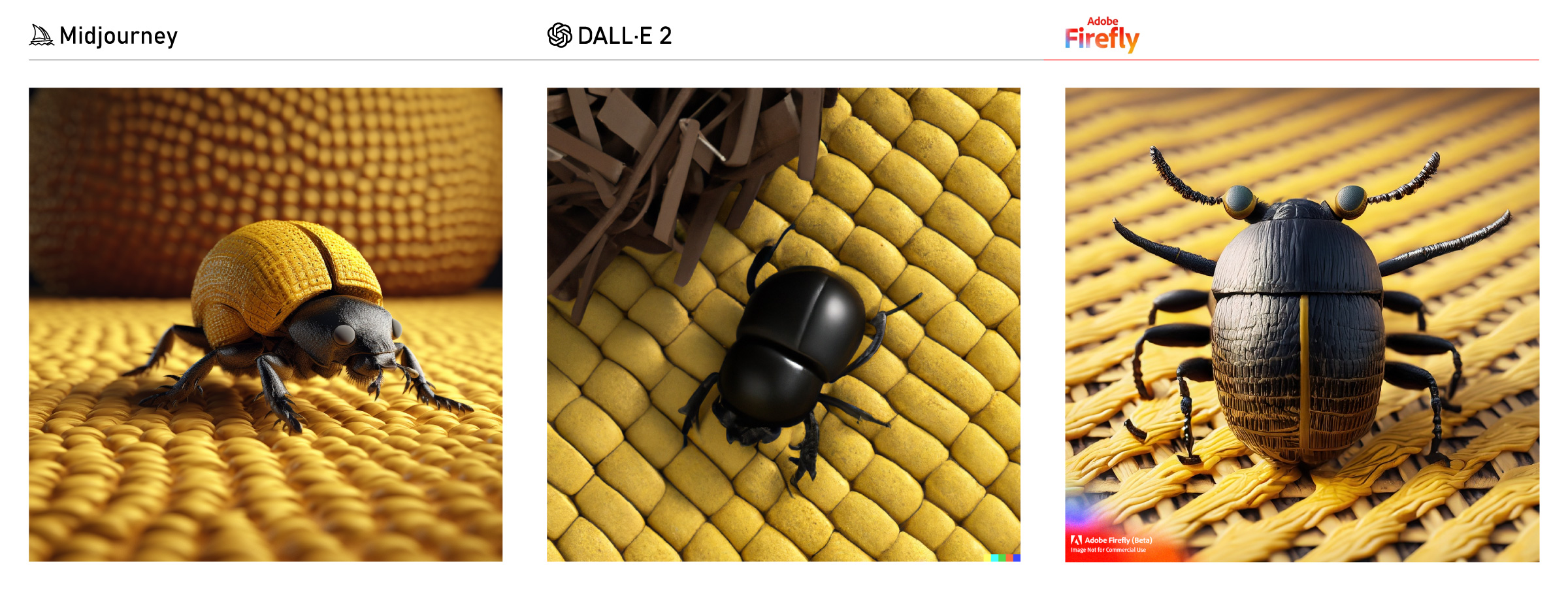

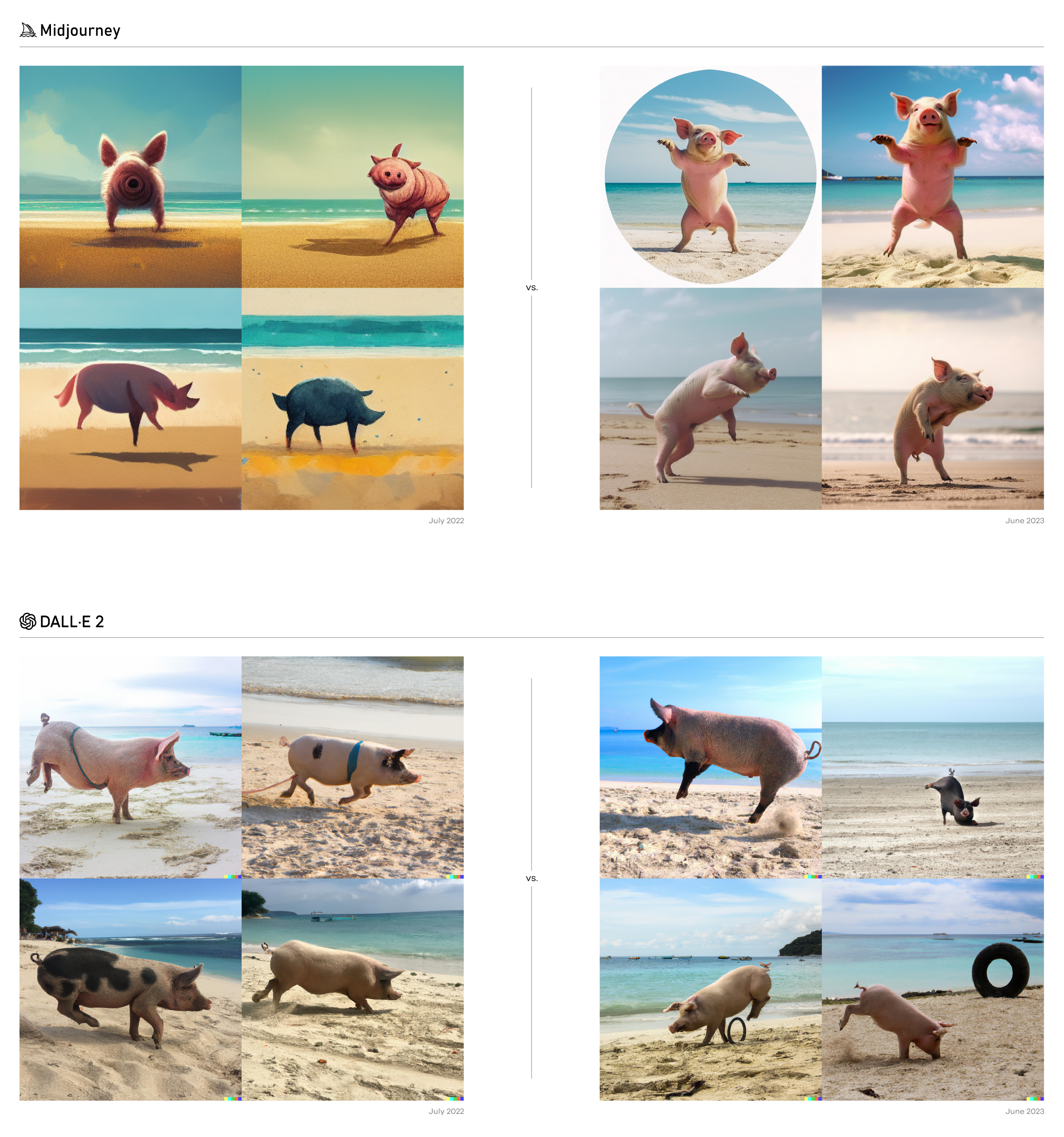

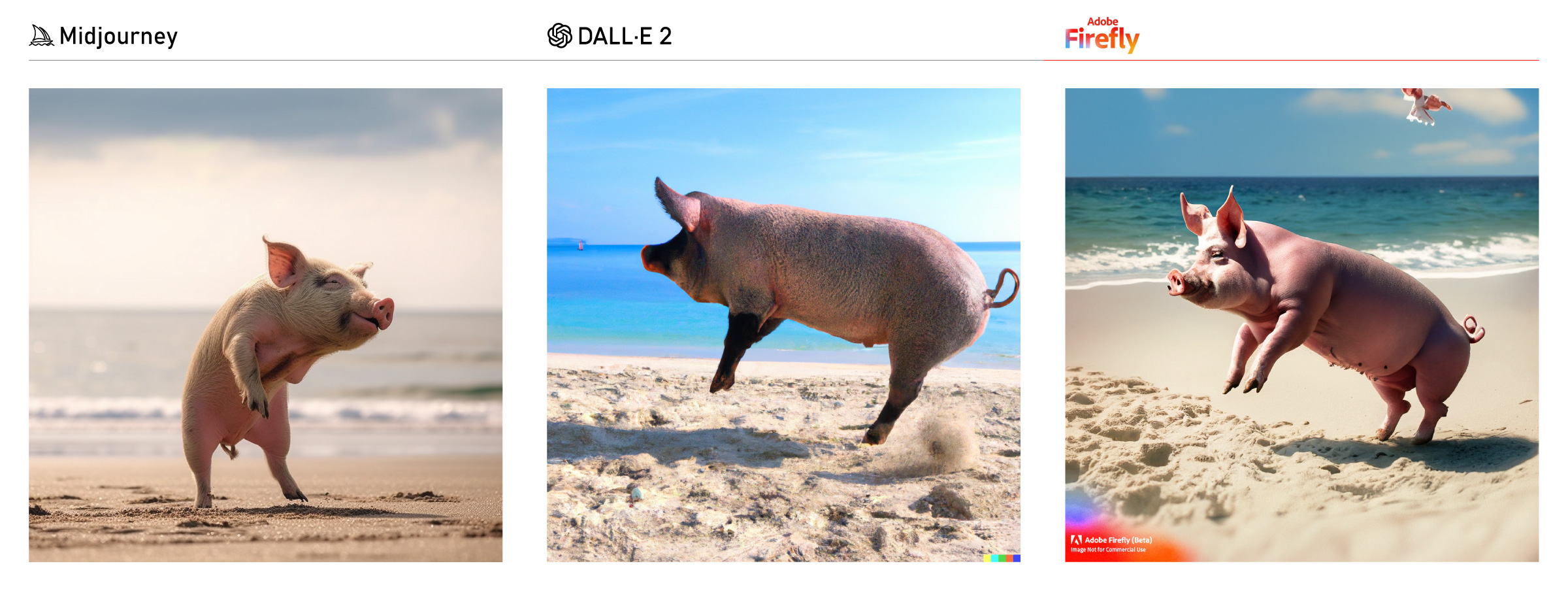

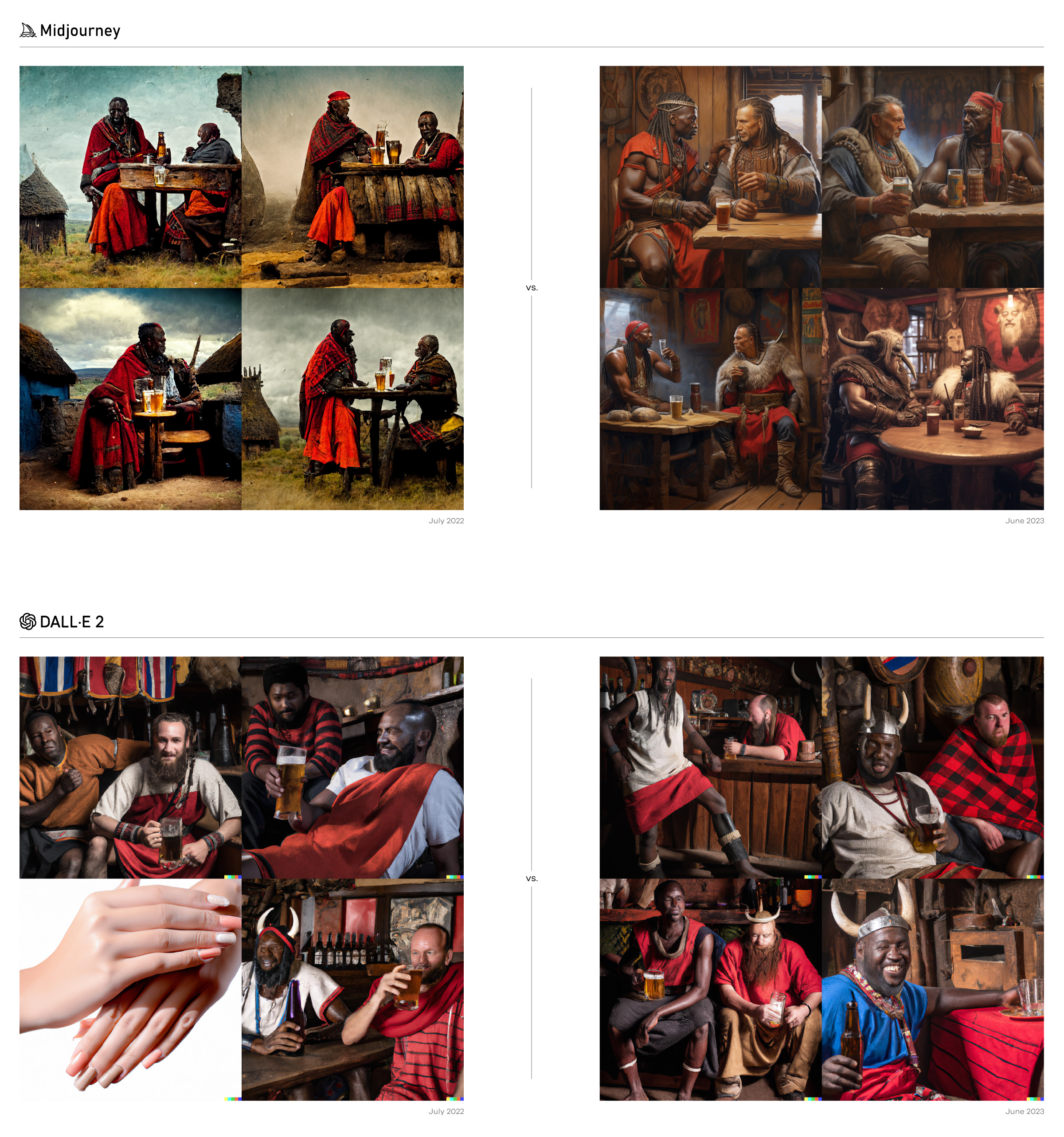

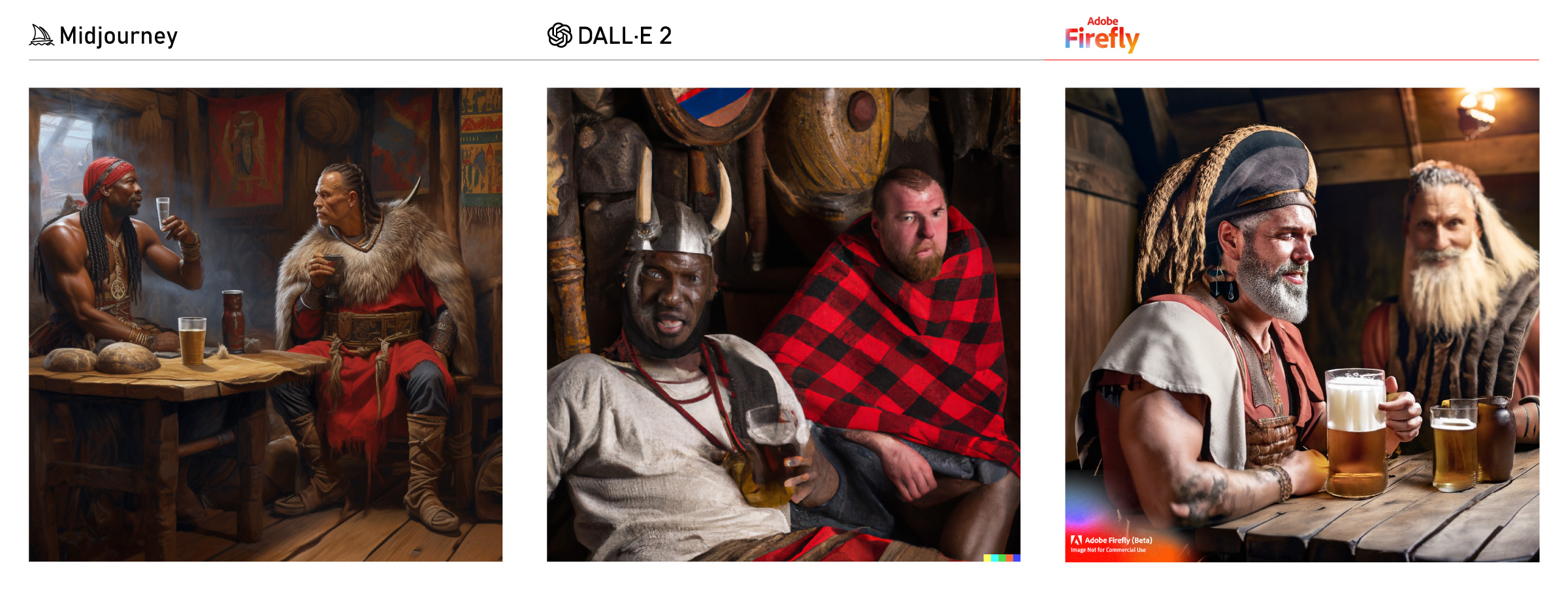

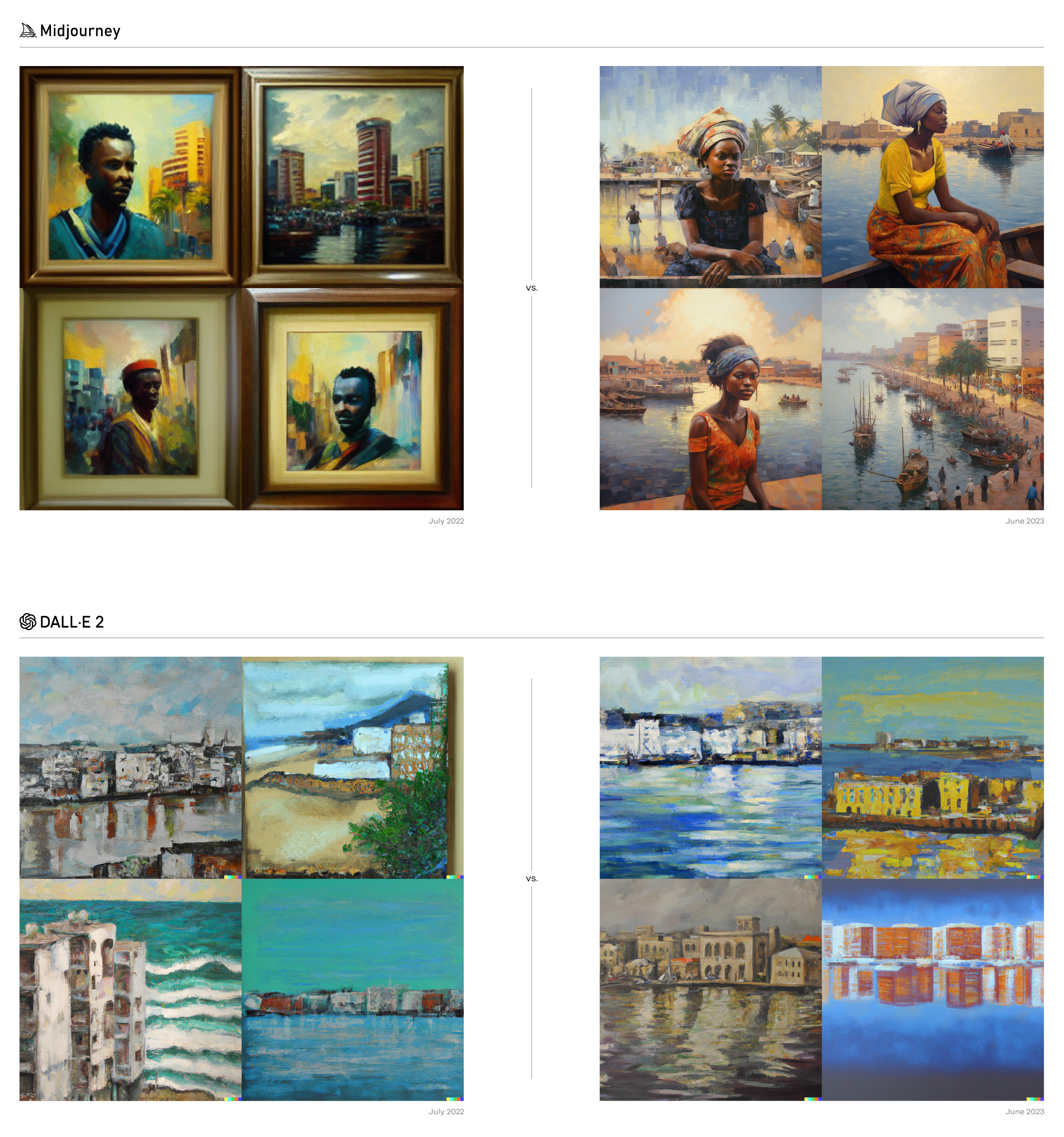

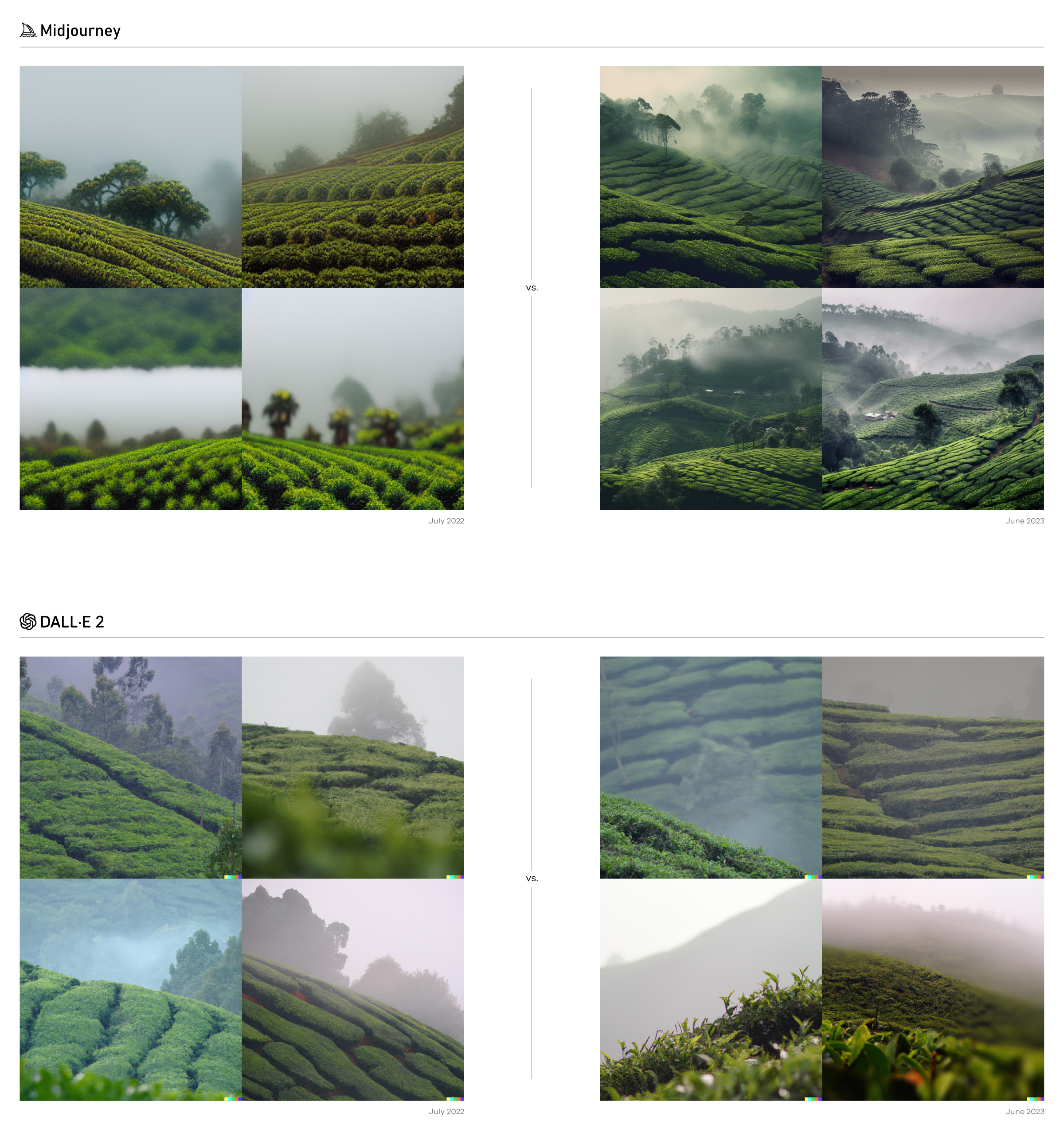

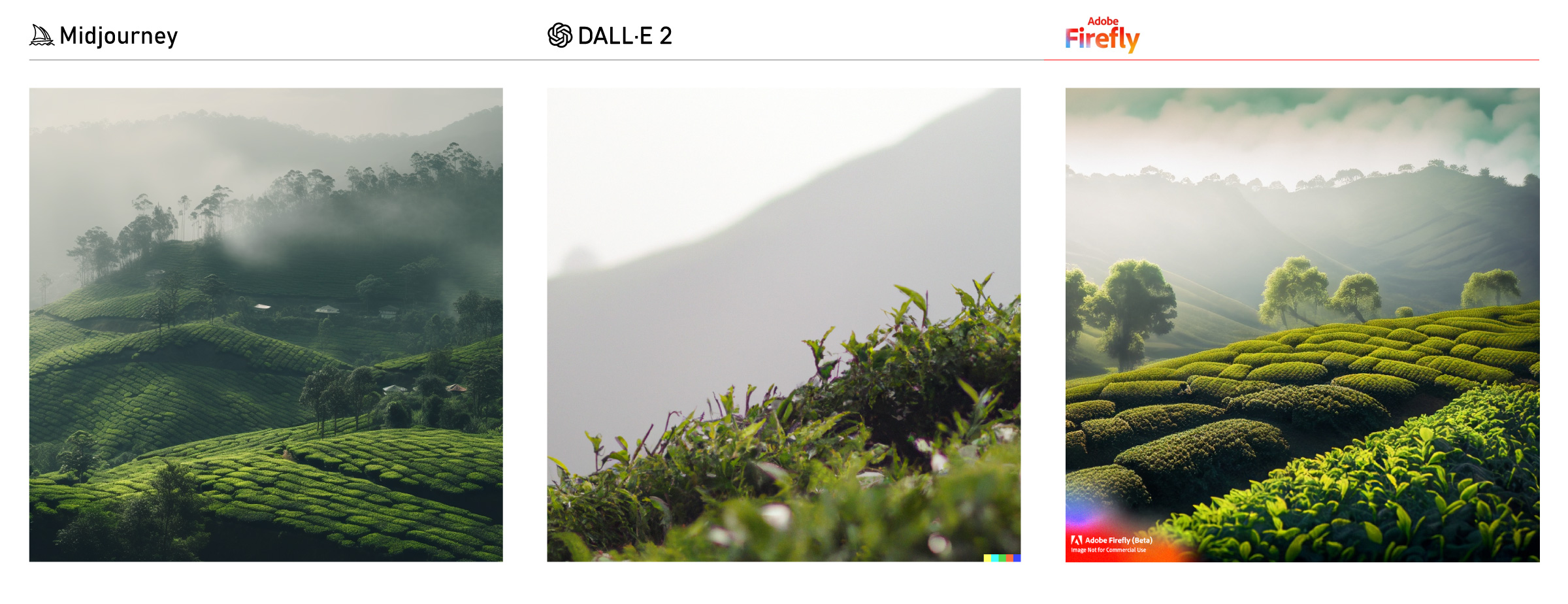

To illustrate this progress, we re-ran the experiments we conducted last year, using the exact same prompts on the same generative engines, and compared last year’s results with today’s.

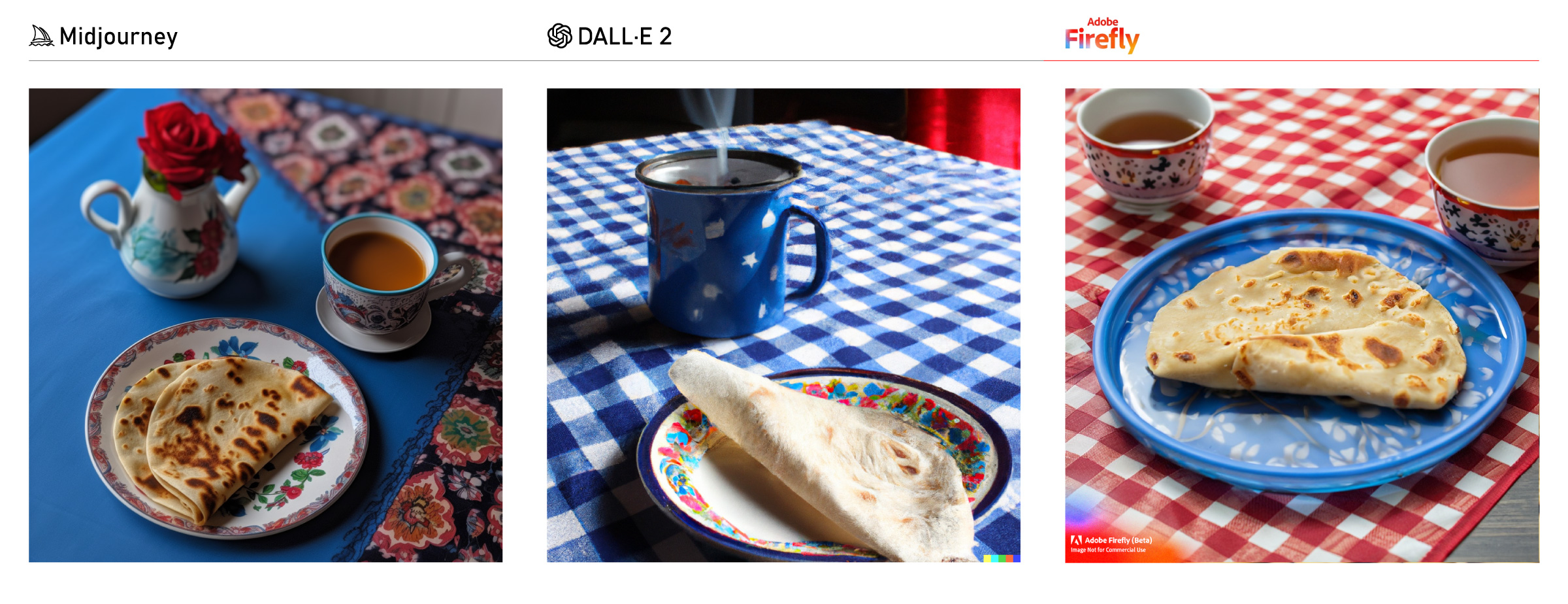

Besides the two OG image-generation engines, we’ve also been playing around with a number of others such as Leonardo.AI and Adobe Firefly. By comparing these models, we’re able to understand the different technologies that power them and the situations in which they shine best. As part of our 9-months-later comparison, we compared the results of the two initial engines we experimented with against those of a newer one, Adobe Firefly.

Insights

So, what have we learned?

As you can see, the delta in less than a year has been nothing short of incredible.

A lot has changed since we published our first case study on generative AI.

After almost 11 months of using these image-generation tools, here are the major changes we’ve noticed as they have evolved.

The Technology

How Does It All Work?

There are countless pieces out there by data scientists and thought leaders in the space explaining this, so rather than add to them, we’ll simplify and clarify the key takeaways relevant to this piece.

Narrow/special models are great at one particular task. For example,

ChatGPT is a transformer that predicts the next word.

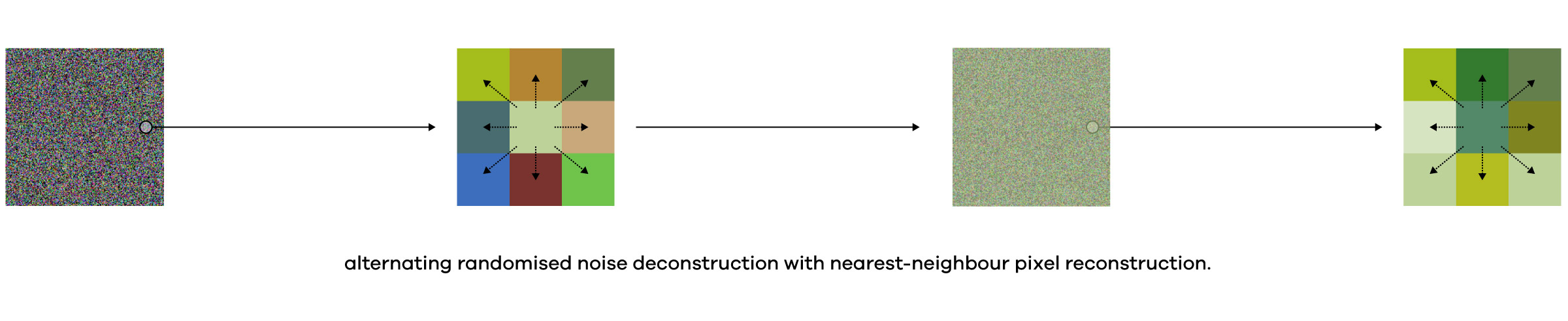
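To make “predicts the next word” a little more concrete, here is a minimal toy sketch. It is not a transformer and not how ChatGPT is built: it is a simple word-pair counter that guesses the next word from the one before it. The corpus and the function names `train` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus; a real model is trained on vastly more text.
CORPUS = "the cat sat on the mat . the cat ate the fish ."


def train(text):
    """Count, for each word, which words follow it in the text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


follows = train(CORPUS)
print(predict_next(follows, "the"))  # "cat" follows "the" more often than "mat" or "fish"
```

A transformer does something far richer, weighing the whole preceding text rather than a single word, but the output is the same kind of thing: a best guess at what comes next.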

Midjourney is a diffusion model that predicts, step by step, how to turn noise into an image.

Midjourney’s diffusion process, simplified: start from random noise, then reconstruct an image by removing that noise a little at a time.

- Every image starts with a grid of random visual “noise”, much like the static you see on your old TV when there’s no antenna plugged in.

- The model then incrementally constructs an image that matches the prompt and is visually similar to the data it was trained on.

- At each step, the model estimates how much of the current grid is noise and adjusts the colour values of the pixels to remove a little of it.

- This denoising step is repeated many times for every image generated, until the final results are presented a few seconds later.

- Users can select and upscale their preferred image to a very decent resolution.

- The random starting noise means every image generated is effectively unique: the same prompt will produce a different image each time, unless the same starting noise is deliberately reused.
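As a rough illustration of the loop described above, here is a minimal toy sketch of iterative denoising. It is not Midjourney’s implementation: the tiny one-dimensional “image”, the `TARGET` values and the `predict_noise` function are hypothetical stand-ins, and the stand-in cheats by knowing the finished image in advance, which a real trained model does not.

```python
import random

# Hypothetical stand-in for what a trained model has learned:
# a tiny 1-D "image" of eight brightness values.
TARGET = [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25]


def predict_noise(image):
    """Estimate the noise in the image (here: its difference from TARGET)."""
    return [pixel - clean for pixel, clean in zip(image, TARGET)]


def generate(seed, steps=20, step_size=0.3):
    """Start from random noise and remove a fraction of the estimated noise each step."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in TARGET]  # pure random "static"
    for _ in range(steps):
        noise = predict_noise(image)
        image = [pixel - step_size * n for pixel, n in zip(image, noise)]
    return image


def error(image):
    """Mean absolute distance between the image and TARGET."""
    return sum(abs(p - c) for p, c in zip(image, TARGET)) / len(TARGET)


static = generate(seed=1, steps=0)  # the starting noise, before any denoising
result = generate(seed=1)           # the same noise after 20 denoising steps
print(error(static), error(result))
```

In this toy, every seed ends up at the same picture because the stand-in only knows one image. A real model has no single target, so different starting noise leads to different images, while reusing the same seed reproduces the same starting noise.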

For more on how LLMs and Transformers work, we recommend reading this excellent Wolfram Alpha piece. If you’d really like to geek out, Attention Is All You Need [PDF] is a great rabbit hole to dive into.

How we’re using AI in strategy-led design.

Compared to 12 months ago in mid-2022, we are using AI a lot more day-to-day at ARK. Of the better-known AI tools, the two we use most often are Midjourney and ChatGPT. We don’t find ourselves using Google’s Bard or OpenAI’s Dall·E that much.

We’re not dependent on AI for anything; we use it as an assistant to complement some stages in our strategic and creative processes – mostly to enrich conversations and enhance ideas.

In Discovery – We use ChatGPT to increase the breadth and speed of mechanical research (then spend some time fact-checking its often overconfident responses, and going deeper manually – and more personally).

In Strategy – Again using ChatGPT and some third-party tools built on its API, we’ve had mixed success at the business strategy stage of our work. Most responses have been generic and conventional – echoing some non-proprietary early thinking during workshops. What’s great, however, is that they’re produced incredibly fast. It’s a case of more quantity than quality, and given that quantity can help experienced strategists and creators in divergent thinking, there is inherent value in sifting through these to spark unique combinations of ideas we wouldn’t have otherwise come up with that fast.

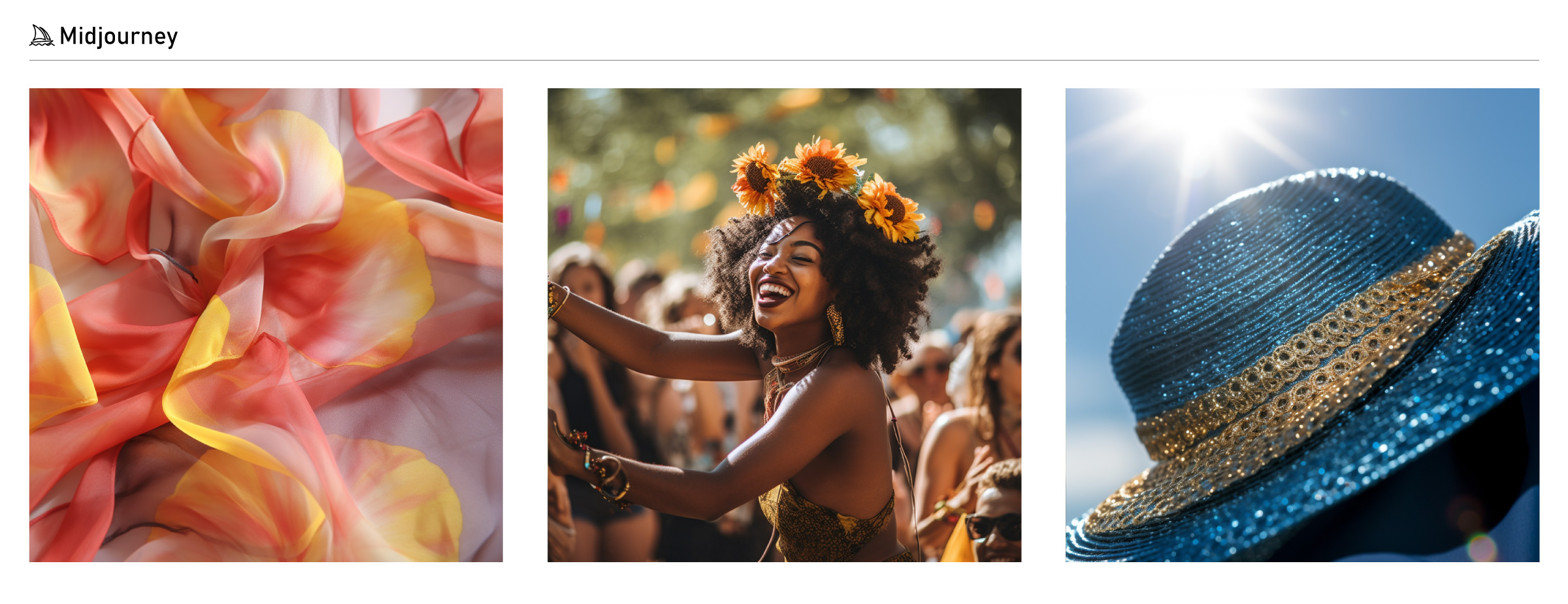

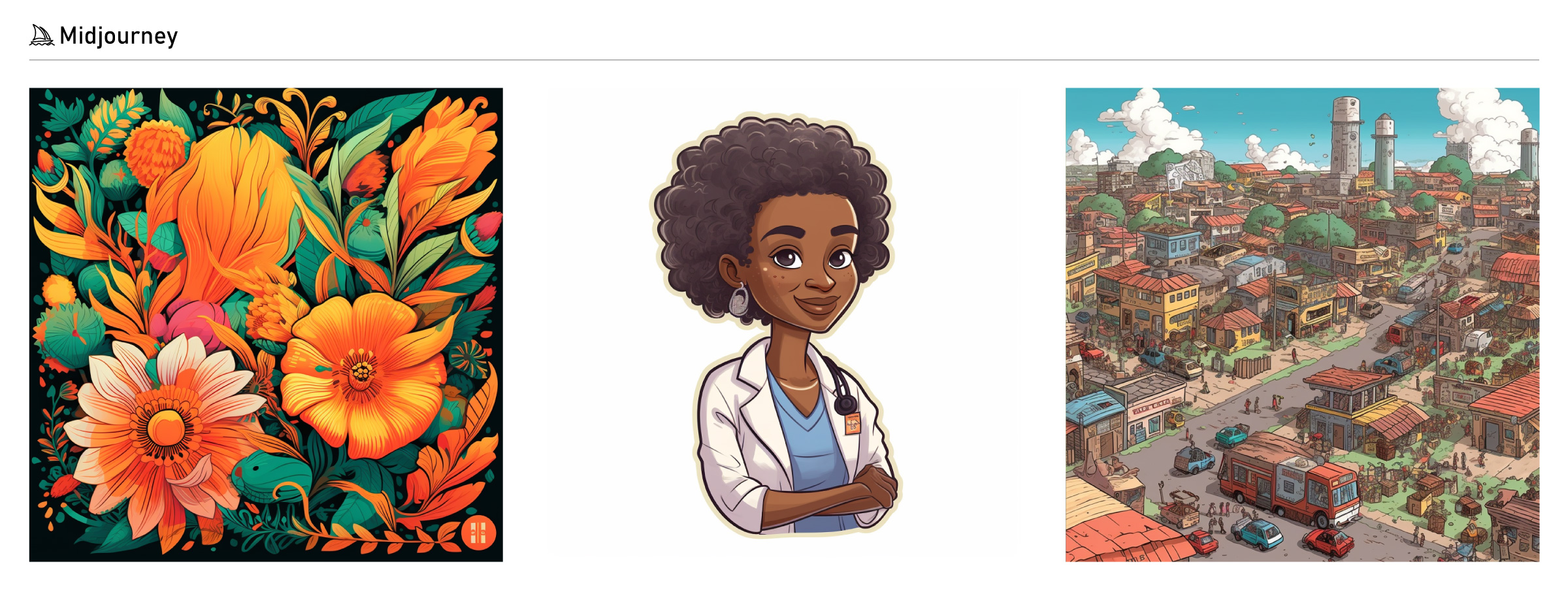

In Creative Direction – By far one of the most interesting ways it helps us today is in kick-starting creative sprints. Any artist or creator dreads the “blank canvas” or “writer’s block”. Thanks to Midjourney and ChatGPT, we have a sparring partner that produces early, rough thought starters to spark divergent ideas and get the creative process moving.

In Design Development – At the tail end of projects, ChatGPT has been a great tool for writing some decent copy if prompted right. We also make good use of Midjourney for moodboarding, simulations and some ideation/concepting, and of newer music generators for thematic background tracks in simple or complementary multimedia pieces.

As AI accelerates some of these early-stage design phases, it allows our experienced strategic team more time to synthesize, frame, debate, problem-solve and set the right courses of action that resonate with people and business.

How well (or poorly) do they know Africa?

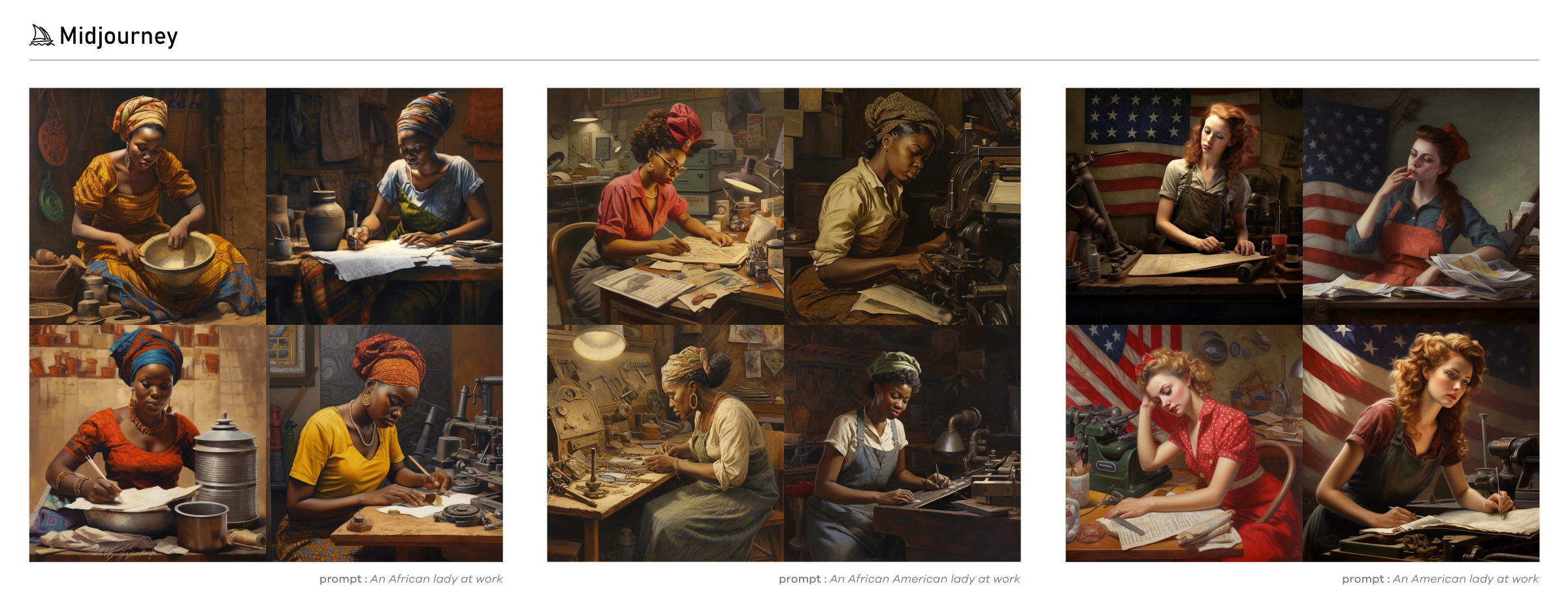

One small but potentially important issue we face today is how these tools represent African subject matter. As illustrated in the “maasai having a beer with a viking” example, there are perceivable gaps in how people, objects and places are depicted compared to other parts of the world. In Midjourney, if we prompt “Africans at work” vs “African Americans at work” vs “North Americans at work”, you’ll immediately sense partiality in how the model interprets these prompts based on how it has been trained.

Work in Africa is depicted as a lot more blue-collar/laborious and in less “prosperous” settings compared to the others. It takes you aback at first, and as much as it is true that some occupational settings in Africa would match this image, is it really an accurate representation of an average or typical setting today?

Understandably, there is less digitized subject matter on the African continent to train on, in both breadth and depth, than there is for Europe or the USA.

However, we’re at a point in time where we’re about to be more reliant on these models to inform and create for us. That information draws from what could be huge cognitive biases in the trainers’ views of the world and siloed datasets available to them – an ironic remnant of the colonial era’s selective erasure and suppression of African knowledge. But let’s get back to the subject at hand, for now.

A glimpse into an unknown tomorrow.

Today’s narrow/special models are learning to do new things that they were not explicitly trained to do (called emergent properties). Essentially, they’re becoming a lot more intelligent, much faster than we imagined, as they inch towards artificial general intelligence (AGI – capable of doing many things very well). That is exciting in what it could do for us, but more scary than comforting in what such (unknown and unexpected) capabilities could unlock if not used cautiously.

Nobody knows what AI will be able to do or where it’s going tomorrow; even those at the forefront of creating these systems don’t fully understand their inner workings or capabilities.

We should be less worried about what the machines might do by themselves than about what people might do with such capabilities at their fingertips, and whether those people’s value systems are aligned with our collective interest of protecting, improving and enhancing the good of humanity and our custodianship of the planet we inhabit.

The more worrying, and perhaps inevitable, scenario – given human nature – would be bad actors using AI to gain more power and extract more commercial gain for their short-term capitalist ambition at the expense of humanity’s social good. The ripple effect of this extends beyond job losses, and may well be the genesis of an existential threat to meaningful life as we know it.

What can we do?

“Just add wisdom.”

Recognizing that intelligence is a good thing, and more of it is great, we believe it is best harnessed and shaped collectively and responsibly – i.e. with wisdom.

As designers, we’re great at imagining possibilities and creating the future.

Those two little superpowers of ours coupled with wisdom from anthropologists, technologists, ethicists, scientists, policy makers, thinkers and specialists across broad fields, can be used…